Hi, I’m Mehdi Fekih

I specialize in the field of data science, where I analyze and interpret complex data to derive meaningful insights.

What I Can Do For You

Data Science

Unlocking insights and driving business growth through data analysis and visualization as a freelance data scientist.

Data Analysis

Helping businesses make informed decisions through insightful data analysis as a freelance data analyst.

Website Development

Bringing your online presence to life with customized website development solutions as a freelance developer.

Home Automation

Transforming your living space into a smart home with custom home automation solutions as a freelance home automation expert.

Docker & Server

Optimizing your software development and deployment with Docker and server management as a freelance expert.

Consultancy

Identification of scope, assessment of feasibility, cleaning and preparation of data, selection of tools and algorithms.

My Portfolio

The world of nutrition is vast and ever-evolving. With a plethora of food products available in the market, making informed choices becomes a challenge. Recognizing this, the agency “Santé publique France” initiated a call for innovative application ideas related to nutrition. We heeded the call and embarked on a journey to develop a unique application that not only provides insights into various food products but also suggests healthier alternatives.

The Inspiration Behind the App

The “Santé publique France” agency, dedicated to public health in France, recognized the need for innovative solutions to address the challenges of modern nutrition. With the vast dataset from Open Food Facts at our disposal, we saw an opportunity to create an application that could make a difference.

Our Streamlit App: Features & Highlights

Our application is designed to be user-friendly and informative:

- Product Insights: Users can select a product and view its comprehensive nutritional information.

- Healthier Alternatives: The app suggests healthier alternatives based on user-defined criteria and the product’s nutritional score.

- Visual Analytics: Throughout the app, users are presented with clear and concise visualizations, making the data easy to understand, even for those new to nutrition.
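The healthier-alternatives feature can be sketched roughly as follows. This is a minimal illustration, not the code of the app: the field names (`name`, `category`, `nutriscore_grade`) and the sample products are assumptions, and the user-defined criteria mentioned above are left out.

```python
from typing import Dict, List

# Hypothetical sample records; the real app reads its data from Open Food Facts.
PRODUCTS: List[Dict[str, str]] = [
    {"name": "Classic chips", "category": "chips", "nutriscore_grade": "d"},
    {"name": "Baked chips", "category": "chips", "nutriscore_grade": "b"},
    {"name": "Lentil chips", "category": "chips", "nutriscore_grade": "a"},
    {"name": "Cola", "category": "sodas", "nutriscore_grade": "e"},
]


def healthier_alternatives(
    product: Dict[str, str], catalog: List[Dict[str, str]], limit: int = 3
) -> List[Dict[str, str]]:
    """Return products from the same category with a strictly better grade.

    Nutri-Score grades run from "a" (best) to "e" (worst), so plain string
    comparison orders them correctly.
    """
    candidates = [
        p for p in catalog
        if p["category"] == product["category"]
        and p["nutriscore_grade"] < product["nutriscore_grade"]
    ]
    return sorted(candidates, key=lambda p: p["nutriscore_grade"])[:limit]
```

Calling `healthier_alternatives(PRODUCTS[0], PRODUCTS)` returns the two better-graded chips, best grade first.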

The Technical Journey

- Data Processing: The Open Food Facts dataset was our primary resource. We meticulously cleaned and processed this data, ensuring its reliability. This involved handling missing values, identifying outliers, and automating these processes for future scalability.

- Univariate & Multivariate Analysis: We conducted thorough analyses to understand individual variables and their interrelationships. This was crucial in developing the recommendation engine for healthier alternatives.

- Application Ideation: Drawing inspiration from real-life scenarios, like David’s sports nutrition focus, Aurélia’s quest for healthier chips, and Sylvain’s comprehensive product rating system, we envisioned an app that catered to diverse user needs.
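The cleaning step described under Data Processing might look like the sketch below. It uses only the standard library and the common 1.5 × IQR rule; the write-up does not say which outlier rule or tooling was used, so both are assumptions, as is the sample column.

```python
from statistics import median, quantiles
from typing import List, Optional


def fill_missing(values: List[Optional[float]]) -> List[float]:
    """Replace missing entries (None) with the median of the known values."""
    known = [v for v in values if v is not None]
    fill = median(known)
    return [fill if v is None else v for v in values]


def iqr_outliers(values: List[float], k: float = 1.5) -> List[float]:
    """Return the values lying outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v for v in values if v < low or v > high]


# Hypothetical sugar content per 100 g, with one gap and one implausible value.
sugars = [4.0, 5.5, None, 6.0, 5.0, 4.5, 250.0]
cleaned = fill_missing(sugars)
outliers = iqr_outliers(cleaned)
```

Wrapping each rule in a small function is one way to get the automation the project mentions: the same functions can be re-run whenever a fresh export of the dataset arrives.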

Deployment with Docker

To ensure our application’s consistent performance across different environments, we turned to Docker. Docker allowed us to containerize our app, ensuring its smooth and consistent operation irrespective of the deployment environment.

Conclusion & Future Prospects

Our Streamlit app is a testament to the power of data-driven solutions in addressing real-world challenges. By leveraging the Open Food Facts dataset and the simplicity of Streamlit, we’ve created an application that empowers users to make informed nutritional choices. As we look to the future, we’re excited about the potential enhancements and the broader impact our app can have on public health.

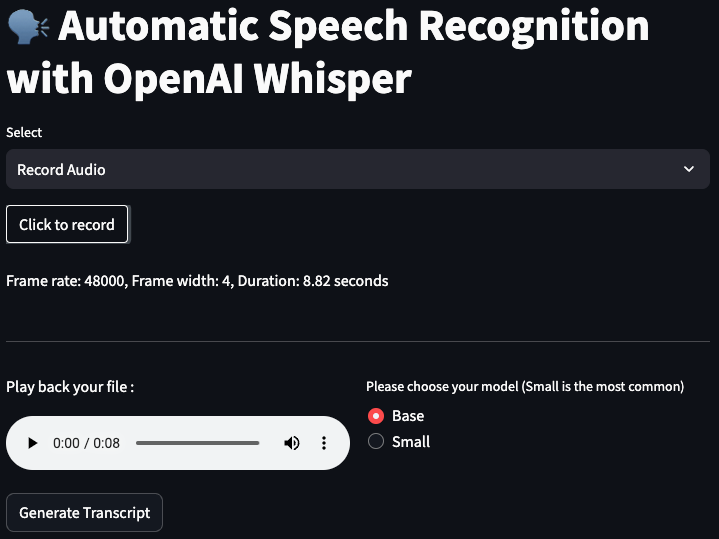

Automatic Speech Recognition: A Streamlit and Whisper Integration

Introduction:

In the ever-evolving landscape of technology, Automatic Speech Recognition (ASR) stands out as a pivotal advancement, turning spoken language into written text. In this article, we delve into a Python script that seamlessly integrates OpenAI’s Whisper ASR with Streamlit, a popular web app framework for Python, to transcribe audio files and present the results in a user-friendly interface.

Script Overview:

The script in focus utilizes several Python libraries, including os, pathlib, whisper, streamlit, and pydub, to create a web application capable of converting uploaded audio files into text transcripts. The application supports a variety of audio formats such as WAV, MP3, MP4, OGG, WMA, AAC, FLAC, and FLV.

Key Components:

- Directory Setup: The script defines three main directories: `UPLOAD_DIR` for storing uploaded audio files, `DOWNLOAD_DIR` for saving converted MP3 files, and `TRANSCRIPT_DIR` for keeping the generated transcripts.

- Audio Conversion: The `convert_to_mp3` function is responsible for converting the uploaded audio file into MP3 format, regardless of its original format. This is achieved using a mapping of file extensions to corresponding conversion methods provided by the `pydub` library.

- Transcription Process: The `transcribe_audio` function leverages OpenAI’s Whisper ASR model to transcribe the converted audio file. Users have the option to choose the model type (Tiny, Base, Small) for transcription.

- Transcript Storage: The `write_transcript` function writes the generated transcript to a text file, stored in the `TRANSCRIPT_DIR`.

- User Interface: Streamlit is employed to create an intuitive user interface, allowing users to upload audio files, choose the ASR model, generate transcripts, and download the results. The interface also provides playback functionality for the uploaded audio file.
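The components above could fit together roughly as in this sketch. The function and directory names come from the description, but the bodies are assumptions: the directory locations are made up, the helper `mp3_path_for` is hypothetical, and the conversion lets ffmpeg detect the input format instead of reproducing the extension-to-method mapping of the script. The third-party imports (`pydub`, `whisper`) sit inside the functions so the path helper can be used without them installed.

```python
from pathlib import Path

# Directory names follow the article; their locations are assumptions.
UPLOAD_DIR = Path("uploads")
DOWNLOAD_DIR = Path("downloads")
TRANSCRIPT_DIR = Path("transcripts")


def mp3_path_for(upload: Path) -> Path:
    """Hypothetical helper: where the converted MP3 for an upload is saved."""
    return DOWNLOAD_DIR / (upload.stem + ".mp3")


def convert_to_mp3(upload: Path) -> Path:
    """Convert an uploaded audio file to MP3 (needs pydub and ffmpeg)."""
    from pydub import AudioSegment

    target = mp3_path_for(upload)
    target.parent.mkdir(parents=True, exist_ok=True)
    # Without an explicit format, pydub leaves format detection to ffmpeg.
    AudioSegment.from_file(str(upload)).export(str(target), format="mp3")
    return target


def transcribe_audio(mp3_file: Path, model_type: str = "base") -> str:
    """Transcribe with Whisper; model_type is "tiny", "base" or "small"."""
    import whisper  # the openai-whisper package

    model = whisper.load_model(model_type)
    return model.transcribe(str(mp3_file))["text"]


def write_transcript(text: str, mp3_file: Path) -> Path:
    """Save the transcript next to the other transcripts as a .txt file."""
    target = TRANSCRIPT_DIR / (mp3_file.stem + ".txt")
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(text, encoding="utf-8")
    return target
```

In a Streamlit page, the three functions would be chained in that order after the upload has been written to `UPLOAD_DIR`.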

Usage:

- Uploading Audio File: Users can upload their audio file through the Streamlit interface, where they are prompted to choose the file and the ASR model type.

- Generating Transcript: Upon clicking the “Generate Transcript” button, the script processes the audio file, transcribes it using the selected Whisper model, and displays a formatted transcript in a toggleable section.

- Downloading Transcript: Users have the option to download the generated transcript as a text file directly from the application.

Conclusion:

This innovative script exemplifies the integration of Automatic Speech Recognition technology with web applications, offering a practical solution for transcribing audio files. By combining the capabilities of OpenAI’s Whisper and Streamlit, it provides a versatile tool that caters to a wide range of audio formats and user preferences. Whether for academic research, content creation, or accessibility, this application stands as a testament to the boundless possibilities of ASR technology in enhancing digital communication.

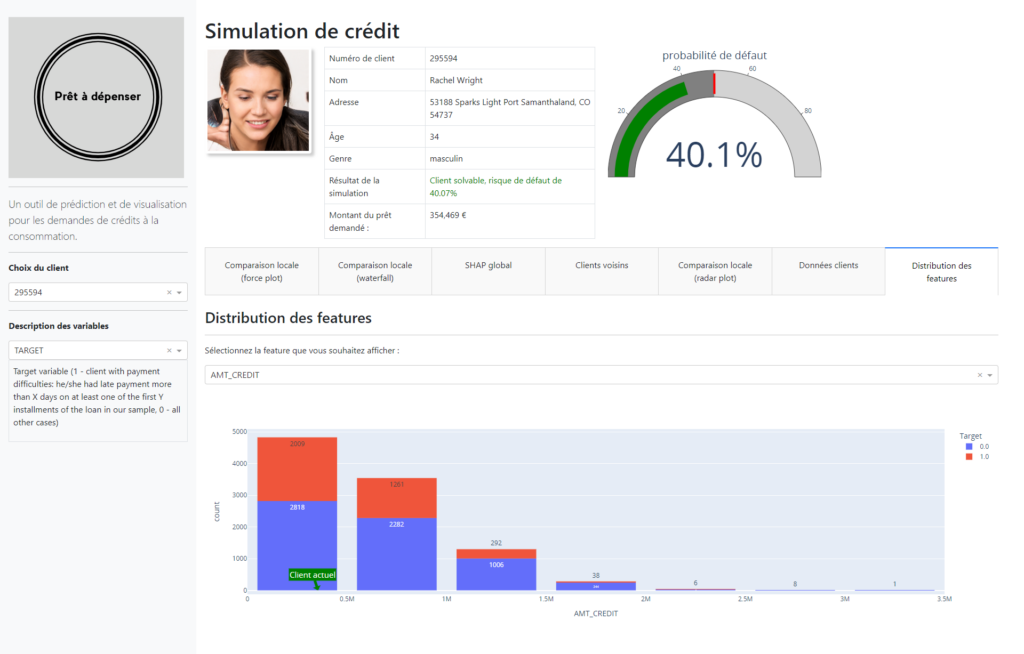

Credit Scoring Project for Credit Match

Background: In the face of the rapidly evolving financial market and the increasing demand for transparency from clients, Credit Match sought our expertise to develop an innovative credit scoring solution. The goal was twofold: to optimize the credit granting decision process and to enhance client relations through transparent communication.

Proposed Solution:

- Automated Scoring Model: We crafted an advanced classification algorithm that leverages a myriad of data sources, including behavioral data and information from third-party financial entities. This algorithm is adept at accurately predicting the likelihood of a client repaying their credit.

- Interactive Dashboard: Addressing the need for transparency, we designed an interactive dashboard tailored for client relationship managers. This dashboard not only elucidates credit granting decisions but also provides clients with easy access to their personal information.

Technologies Deployed:

- Analysis and Modeling: We utilized Kaggle kernels to facilitate exploratory analysis, data preparation, and feature engineering. These kernels were adapted to meet the specific needs of Credit Match.

- Dashboard: Based on the provided specifications, we chose [Dash/Bokeh/Streamlit] to develop the interactive dashboard.

- MLOps: To ensure regular and efficient model updates, we implemented an MLOps approach, relying on Open Source tools.

- Data Drift Detection: The evidently library was integrated to anticipate and detect any future data discrepancies, thus ensuring the model’s long-term robustness.

Deployment: The solution was deployed on [Azure webapp/PythonAnywhere/Heroku], ensuring optimal availability and performance.

Results: Our model achieved an AUC above 0.82, and we took precautions to guard against overfitting. In addition, given Credit Match’s business specifics, we tuned the model to minimize the cost of prediction errors rather than the raw error count.
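One common way to minimize the cost of prediction errors is to pick the decision threshold that gives the lowest total cost instead of using 0.5. The sketch below illustrates that idea only: the 10-to-1 cost ratio, the toy data, and the convention that the score is a probability of default are all assumptions, not figures from the project.

```python
from typing import List, Tuple

# Hypothetical business costs: a missed default (false negative) is assumed
# to cost ten times as much as refusing a good client (false positive).
COST_FN = 10.0
COST_FP = 1.0


def total_cost(y_true: List[int], proba: List[float], threshold: float) -> float:
    """Cost of refusing credit whenever the default probability >= threshold."""
    cost = 0.0
    for label, p in zip(y_true, proba):
        predicted_default = p >= threshold
        if label == 1 and not predicted_default:
            cost += COST_FN  # granted a loan that later defaulted
        elif label == 0 and predicted_default:
            cost += COST_FP  # refused a client who would have repaid
    return cost


def best_threshold(y_true: List[int], proba: List[float]) -> Tuple[float, float]:
    """Scan thresholds 0.01..0.99 and return (threshold, cost) with lowest cost."""
    candidates = [i / 100 for i in range(1, 100)]
    return min(
        ((t, total_cost(y_true, proba, t)) for t in candidates),
        key=lambda pair: pair[1],
    )


# Toy data: 1 = client defaulted, 0 = client repaid.
y_true = [0, 0, 0, 1, 0, 1, 1, 0]
proba = [0.05, 0.20, 0.35, 0.40, 0.45, 0.60, 0.80, 0.10]
threshold, cost = best_threshold(y_true, proba)
```

On this toy data the cheapest threshold falls below 0.5, because accepting one extra false positive is far cheaper than missing a default.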

Documentation: A detailed technical note was provided to Credit Match, allowing for transparent sharing of our approach, from model conception to Data Drift analysis.

Analysis of Data from Education Systems

Introduction

This project is an analysis of data from education systems, aimed at providing insights into the trends and patterns of the education sector. The data is sourced from various educational institutions, and the project aims to extract meaningful information from this data to inform education policies and practices.

Getting Started

To get started with this project, you will need to have a basic understanding of data analysis and data visualization tools. The project is written in Python, and you will need to have Python 3.x installed on your system.

You can download the source code from the GitHub repository and install the necessary dependencies using pip. Once you have installed the dependencies, you can run the project using the command line.

Project Structure

The project is organized into several directories, each of which contains code related to a specific aspect of the project. The directories are as follows:

- data: contains the data used in the project.

- notebooks: contains Jupyter notebooks used for data analysis and visualization.

- scripts: contains Python scripts used for data preprocessing and analysis.

- reports: contains reports generated from the data analysis.

Leveraging NLP for PDF Content Q&A with Streamlit and OpenAI

Introduction

In the vast ocean of unstructured data, PDFs stand out as one of the most common and widely accepted formats for sharing information. From research papers to company reports, these files are ubiquitous. But with the ever-growing volume of information, navigating and extracting relevant insights from these documents can be daunting. Enter our recent project: a Streamlit application leveraging OpenAI to answer questions about the content of uploaded PDFs. In this article, we’ll dive into the technicalities and the exciting outcomes of this endeavor.

The Challenge

While PDFs are great for preserving the layout and formatting of documents, extracting and processing their content programmatically can be challenging. Our goal was simple but ambitious: develop an application where users can upload a PDF and then ask questions related to its content, receiving relevant answers in return.

The Stack

- Streamlit: A fast, open-source framework that lets developers build machine learning and data applications with very little code.

- OpenAI: Leveraging the power of NLP models for text embeddings and semantic understanding.

- PyPDF2: A Python library to extract text from PDF files.

- langchain (custom modules): For text splitting, embeddings, and more.

The Process

- PDF Upload and Text Extraction: Once a user uploads a PDF, we use `PyPDF2` to extract its text content, ensuring the preservation of the sequence of words.

- Text Splitting: Given that PDFs can be extensive, we implemented the `CharacterTextSplitter` from `langchain` to break down the text into manageable chunks. This modular approach ensures efficiency and high-quality results in the subsequent steps.

- Text Embedding: We employed `OpenAIEmbeddings` from `langchain` to convert these chunks of text into vector representations. These embeddings capture the semantic essence of the text, paving the way for accurate similarity searches.

- Building the Knowledge Base: Using `FAISS` from `langchain`, we constructed a knowledge base from the embeddings of the chunks, ensuring a swift and efficient retrieval process.

- User Q&A: With the knowledge base in place, users can pose questions about the uploaded PDF. By performing a similarity search within our knowledge base, we retrieve the most relevant chunks corresponding to the user’s query.

- Answer Extraction: Leveraging OpenAI, we implemented a question-answering mechanism, providing users with precise answers to their questions based on the content of the PDF.
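The split, embed, and retrieve steps can be illustrated with a dependency-free stand-in. This is not the langchain, OpenAI, or FAISS code of the project: the word-count "embedding" and the brute-force search below are deliberately simple substitutes, and every function name is hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def split_text(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Cut text into fixed-size character windows that overlap slightly."""
    step = chunk_size - overlap
    return [text[i:i + chunk_size] for i in range(0, len(text), step)]


def embed(text: str) -> Counter:
    """Toy embedding: word counts. The real app calls an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def top_chunks(question: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]
```

The overlap between neighbouring chunks keeps a sentence that straddles a boundary retrievable from at least one side; the retrieved chunks are what would then be passed to the language model together with the question.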

Outcomes and Reflections

The Streamlit application stands as a testament to the power of combining user-friendly interfaces with potent NLP capabilities. While our project showcases significant success in answering questions about the content of a wide range of PDFs, there are always challenges:

- Quality of Text Extraction: Some PDFs, especially those with images, tables, or non-standard fonts, may not yield perfect text extraction results.

- Handling Large Documents: For exceedingly long PDFs, further optimizations may be required to maintain real-time processing.

Future Directions

- Incorporate OCR (Optical Character Recognition): To handle PDFs that contain images with embedded text.

- Expand to Other File Types: Venturing beyond PDFs to support other formats like DOCX or PPT.

- Advanced Models: Exploring more advanced models from OpenAI or even fine-tuning models for specific domain knowledge.

My Blog

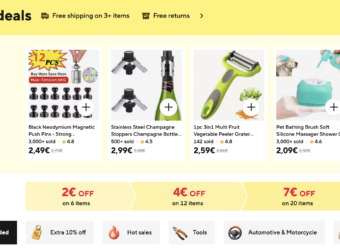

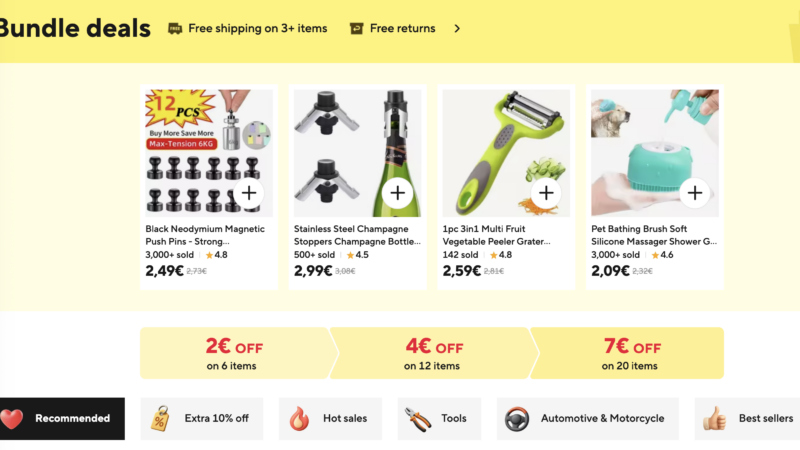

AliExpress Bundle Bypass


Tired of AliExpress “Bundle Deals” that force you to buy several items? This free script intercepts those links and redirects you straight to the individual product page.

The Problem with Bundle Deals

AliExpress increasingly pushes shoppers toward “Bundle Deals”: grouped offers that look attractive but prevent you from buying a single item at its unit price. When you click a product in the search results, you land on an intermediate page that forces you to add other items.

The link looks like this:

aliexpress.com/ssr/300000512/BundleDeals2?productIds=1005008154245742...

Instead of the direct link to the product:

aliexpress.com/item/1005008154245742.html

The Solution: AliExpress Bundle Bypass

This userscript automatically detects “Bundle Deals” links and converts them into direct links to the product page. You no longer have to work out how to get around these pages; the script does it for you.

Features

- Automatic redirection: If you land on a Bundle page, you are immediately redirected to the product

- Link conversion: Bundle links in the search results are rewritten in real time

- Click interception: Even if a link has not been converted, the click is intercepted and redirected

- Debug panel: A visual indicator shows how many links have been detected and converted

- Visual indicators: Converted links are outlined in green for confirmation

Installation

The script runs inside a userscript manager. Here is how to install it, depending on your browser:

🟡 Google Chrome

- Install the Tampermonkey extension from the Chrome Web Store

- Click the Tampermonkey icon in the extensions bar

- Select “Create a new script…”

- Delete the default content

- Paste the script code (see below)

- Press Ctrl+S to save

- Refresh AliExpress; the debug panel should appear in the top-right corner

🟠 Mozilla Firefox

- Install the Violentmonkey or Greasemonkey extension from Firefox Add-ons

- Click the extension icon

- Click “+” to create a new script

- Paste the script code

- Save with Ctrl+S

- Test on AliExpress

🔵 Microsoft Edge

- Install Tampermonkey for Edge from the Microsoft Edge Add-ons store

- Click the Tampermonkey icon

- Select “Create a new script…”

- Paste the code and save

🦁 Brave Browser

Brave is based on Chromium, so the procedure is identical to Chrome. Install Tampermonkey from the Chrome Web Store (it is compatible with Brave).

The Script Code

Copy the entire code below:

```javascript
// ==UserScript==
// @name         AliExpress Bundle Bypass
// @namespace    https://github.com/mehdi
// @version      3.1.0
// @description  Bypass bundle deals and go directly to product pages
// @author       Mehdi
// @match        *://*.aliexpress.com/*
// @match        *://*.aliexpress.ru/*
// @match        *://*.aliexpress.us/*
// @grant        GM_addStyle
// @run-at       document-end
// ==/UserScript==

(function() {
    'use strict';

    // Links already handled, so repeated scans do not count them twice
    const processedLinks = new WeakSet();
    let isScanning = false;
    let scanTimeout = null;
    let scannedCount = 0;
    let foundCount = 0;
    let convertedCount = 0;

    // Debug panel
    function createDebugPanel() {
        const panel = document.createElement('div');
        panel.id = 'bundle-bypass-debug';
        panel.innerHTML = `
            <div style="
                position: fixed;
                top: 10px;
                right: 10px;
                background: #1a1a2e;
                color: #0f0;
                font-family: monospace;
                font-size: 12px;
                padding: 10px 15px;
                border-radius: 8px;
                z-index: 999999;
                max-width: 350px;
                box-shadow: 0 4px 20px rgba(0,255,0,0.3);
                border: 1px solid #0f0;
            ">
                <div style="font-weight: bold; margin-bottom: 8px; color: #0ff;">
                    ⚡ BUNDLE BYPASS v3.1
                </div>
                <div id="bb-status">Active ✓</div>
                <div id="bb-stats" style="margin-top: 8px;">
                    Scanned: <span id="bb-scanned">0</span> |
                    Bundles: <span id="bb-found">0</span> |
                    Fixed: <span id="bb-converted">0</span>
                </div>
                <button id="bb-close" style="position: absolute; top: 5px; right: 10px; background: none; border: none; color: #f00; cursor: pointer;">✕</button>
            </div>
        `;
        return panel;
    }

    // Refresh the counters shown in the debug panel (no-op if it was closed)
    function updateStats() {
        const el1 = document.getElementById('bb-scanned');
        const el2 = document.getElementById('bb-found');
        const el3 = document.getElementById('bb-converted');
        if (el1) el1.textContent = scannedCount;
        if (el2) el2.textContent = foundCount;
        if (el3) el3.textContent = convertedCount;
    }

    // Redirect if on bundle page
    const currentUrl = window.location.href;
    if (currentUrl.includes('BundleDeals')) {
        const match = currentUrl.match(/productIds=(\d+)/);
        if (match) {
            window.location.replace(`https://www.aliexpress.com/item/${match[1]}.html`);
            return;
        }
    }

    // Pull the product ID out of a bundle URL, or return null if none is found
    function extractProductId(url) {
        let match = url.match(/productIds=(\d+)/);
        if (match) return match[1];
        match = url.match(/x_object_id[%3A:]+(\d+)/i);
        if (match) return match[1];
        return null;
    }

    // Rewrite every unprocessed bundle link on the page to its direct product URL
    function scanLinks() {
        if (isScanning) return;
        isScanning = true;
        document.querySelectorAll('a[href*="BundleDeals"]').forEach(link => {
            if (processedLinks.has(link)) return;
            processedLinks.add(link);
            scannedCount++;
            const productId = extractProductId(link.href);
            if (productId) {
                foundCount++;
                link.dataset.originalBundle = link.href;
                link.href = `https://www.aliexpress.com/item/${productId}.html`;
                link.classList.add('bb-converted');
                convertedCount++;
            }
        });
        updateStats();
        isScanning = false;
    }

    // Collapse bursts of DOM mutations into a single scan
    function debouncedScan() {
        if (scanTimeout) clearTimeout(scanTimeout);
        scanTimeout = setTimeout(scanLinks, 200);
    }

    // Click interceptor (fallback)
    document.addEventListener('click', function(e) {
        const link = e.target.closest('a');
        if (!link || !link.href.includes('BundleDeals')) return;
        const productId = extractProductId(link.href);
        if (productId) {
            e.preventDefault();
            e.stopPropagation();
            const url = `https://www.aliexpress.com/item/${productId}.html`;
            if (link.target === '_blank') {
                window.open(url, '_blank');
            } else {
                window.location.href = url;
            }
        }
    }, true);

    // Observer: rescan when the page adds content, ignoring the panel's own updates
    const observer = new MutationObserver(mutations => {
        if (mutations.some(m => m.target.closest('#bundle-bypass-debug'))) return;
        debouncedScan();
    });

    // CSS
    const style = document.createElement('style');
    style.textContent = `
        a.bb-converted { outline: 3px solid #0f0 !important; outline-offset: 2px !important; }
        a[href*="BundleDeals"]:not(.bb-converted) { outline: 3px dashed #ff0 !important; }
    `;
    document.head.appendChild(style);

    // Init
    function init() {
        document.body.appendChild(createDebugPanel());
        document.getElementById('bb-close').onclick = () =>
            document.getElementById('bundle-bypass-debug').remove();
        scanLinks();
        observer.observe(document.body, { childList: true, subtree: true });
        // Poll a few times as a safety net for late-loading results
        let polls = 0;
        const id = setInterval(() => { scanLinks(); if (++polls >= 5) clearInterval(id); }, 2000);
    }

    document.readyState === 'loading'
        ? document.addEventListener('DOMContentLoaded', init)
        : init();
})();
```

Usage

Once installed, the script runs automatically on every AliExpress page. You will see:

- A debug panel in the top-right corner of the screen with real-time statistics

- A green outline around links that were converted successfully

- A dashed yellow outline around Bundle links that have not been processed yet (rare)

You can close the debug panel by clicking the red ✕. The script keeps running in the background.

Troubleshooting

The debug panel does not appear

- Check that the script is enabled in Tampermonkey/Violentmonkey

- Refresh the page with Ctrl+F5

- Check that the script matches the domain (aliexpress.com)

The counters stay at 0

This is normal if the current page contains no Bundle links. Run a product search to see the script in action.

AliExpress changed its code

AliExpress changes its interface regularly. If the script stops working, open the developer console (F12) and check whether Bundle links still use the BundleDeals pattern in the URL. If the format has changed, the script will need to be updated.

Disabling debug mode

If the panel gets in your way, comment out or delete the createDebugPanel() function and, in init(), both the document.body.appendChild(createDebugPanel()) line and the bb-close click handler that follows it. Removing only the appendChild line would make the bb-close lookup return null and stop init() with an error before the first scan.

Script tested on Chrome 120+, Firefox 121+, Edge 120+, and Brave 1.61+. Last updated: January 2025.

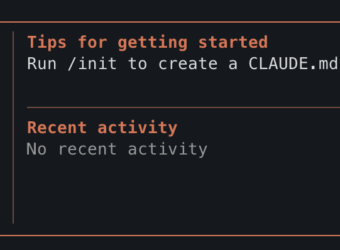

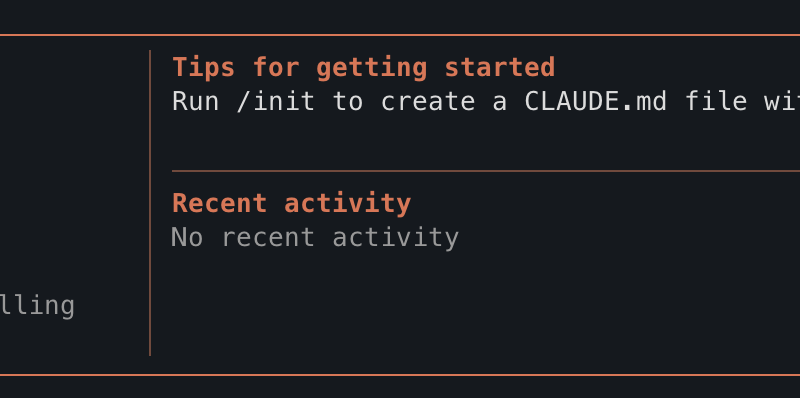

Claude Code + Ollama: The Dream of Free Local Coding Meets Reality

TL;DR: Ollama v0.14.0 finally makes it possible to use Claude Code with local models. On paper, that is revolutionary. In practice, open-source models are not yet up to the agentic workflow.

The Announcement That Made Developers Drool

In mid-January 2026, Ollama announced native support for the Anthropic API. Translation: Claude Code, Anthropic’s agentic coding tool, can now run on local models such as Qwen, Mistral, or LLaMA. No more tokens billed by usage, no more proprietary code sent to the cloud. The Holy Grail for the privacy-conscious developer.

I wanted to try it. Here is what I learned.

The Setup (Which Works)

Prerequisite: Ollama v0.14.0 or later. If you are on a Mac:

```shell
brew upgrade ollama
brew services restart ollama
ollama --version # should print 0.14.0+
```

After that, two environment variables are enough:

```shell
export ANTHROPIC_AUTH_TOKEN=ollama
export ANTHROPIC_BASE_URL=http://localhost:11434
```

Then launch Claude Code with the model of your choice:

```shell
ollama pull qwen3-coder
claude --model qwen3-coder
```

Ollama recommends models with a context window of at least 32K tokens. Qwen3-coder and gpt-oss:20b are the best fits.
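To see what these two variables point Claude Code at, the sketch below builds the kind of request an Anthropic-style client would send to the local server. It is an illustration under assumptions: the `/v1/messages` path and the header set mirror the Anthropic Messages API and are assumed to be what Ollama accepts, and `build_request` and `ask` are hypothetical helper names.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434"  # same value as ANTHROPIC_BASE_URL


def build_request(prompt: str, model: str = "qwen3-coder") -> urllib.request.Request:
    """Build (but do not send) an Anthropic-style Messages API request."""
    body = {
        "model": model,
        "max_tokens": 256,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=BASE_URL + "/v1/messages",  # assumed path, mirroring the Anthropic API
        data=json.dumps(body).encode("utf-8"),
        headers={
            "content-type": "application/json",
            "x-api-key": "ollama",  # placeholder token, as in ANTHROPIC_AUTH_TOKEN
            "anthropic-version": "2023-06-01",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request; needs a running Ollama server with the model pulled."""
    with urllib.request.urlopen(build_request(prompt)) as response:
        reply = json.load(response)
    # Keep only the text blocks of the reply.
    return "".join(b.get("text", "") for b in reply["content"] if b["type"] == "text")
```

A plain question sent this way is a quick check that the server answers at all before bringing Claude Code and its tool calls into the picture.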

Where It Breaks Down

Claude Code is not a simple chatbot. It is an agent that uses tool calls to read files, run code, and navigate a codebase. The problem: open-source models have not fully mastered Anthropic’s tool-use format.

Concretely, here is what I observed with qwen2.5-coder:7b:

```
❯ fais un hello world
⏺ {"name": "Skill", "arguments": {"skill": "console", "args": "Hello, World!"}}
```

The prompt asks, in French, for a hello world. The model tries to emit a tool call, but it dumps raw JSON instead of executing it. With qwen3-coder (a larger model), /init took 4 minutes and still did not create the expected CLAUDE.md file. With gpt-oss:20b, I got “Invalid tool parameters”.

The pattern is clear: local models vaguely understand what is being asked, but they do not structure their responses the way Claude would natively.
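The difference between a real tool call and the leaked JSON shown in the transcript can be made concrete. In the Anthropic Messages format, a tool request is a content block of type `tool_use` with `id`, `name`, and `input` fields, whereas the 7B model returned ordinary text that merely contains JSON. The checker below is a hypothetical diagnostic, not part of Claude Code, and the `toolu_01` id and `Bash` tool name are illustrative values.

```python
import json
from typing import Any, Dict, List


def classify_reply(content: List[Dict[str, Any]]) -> str:
    """Label a reply by how (or whether) it requests a tool.

    "tool_use":    a structured block that an agent can execute.
    "leaked_json": a text block whose body merely looks like a tool call.
    "text":        ordinary prose.
    """
    for block in content:
        if block.get("type") == "tool_use":
            return "tool_use"
    for block in content:
        if block.get("type") != "text":
            continue
        try:
            parsed = json.loads(block.get("text", ""))
        except json.JSONDecodeError:
            continue
        if isinstance(parsed, dict) and "name" in parsed and "arguments" in parsed:
            return "leaked_json"
    return "text"


# What an agent expects: a structured tool_use block (illustrative values).
well_formed = [{"type": "tool_use", "id": "toolu_01", "name": "Bash",
                "input": {"command": "echo 'Hello, World!'"}}]

# What the 7B model produced in the transcript: JSON inside plain text.
leaked = [{"type": "text",
           "text": '{"name": "Skill", "arguments": {"skill": "console", "args": "Hello, World!"}}'}]
```

Here `classify_reply(well_formed)` yields `"tool_use"` while `classify_reply(leaked)` yields `"leaked_json"`, which is why the agent printed the JSON instead of running anything.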

Why This Was Predictable

Claude Code was designed for Claude. Its system prompt, its tool schema, its response conventions: everything is calibrated for Anthropic’s models. When you plug in a third-party model, it has to:

- Understand a massive system prompt (several thousand tokens)

- Follow a very specific tool-call format

- Reason across multiple steps (planning, execution, verification)

- Handle a context window that grows with every interaction

Models in the 7B-20B range simply do not have the capacity to juggle all of that. Even 32B+ models struggle with complex workflows.

Alternatives That Work Better

If you want local assisted coding, several options are more mature:

- Continue (VS Code extension): designed from the start for local models

- Cline: a coding agent with native Ollama support

- aider: a pair-programming CLI, excellent with GPT-4 but also usable locally

- OpenCode: an open-source alternative to Claude Code, built to be provider-agnostic

These tools have prompts and workflows adapted to the limitations of open-source models.

The Verdict

The Ollama + Claude Code integration is a real technical step forward. For the first time, you can run Anthropic’s agent without sending a single request to their servers. But the experience is degraded: slowness, badly formatted tool calls, workflows that crash.

My advice: if you have a Claude Pro subscription or an API budget, stay on native Claude for serious work. Use the local alternatives for experimentation, personal projects, or air-gapped environments.

The promise of 100% local agentic coding is here. The models are not there yet.

Tested on a Mac with Ollama 0.14.0, Claude Code v2.1.15, qwen2.5-coder:7b, qwen3-coder, and gpt-oss:20b.

Evaluating iSCSI vs NFS for Proxmox Storage: A Comparative Analysis

In the realm of virtualization, selecting the appropriate storage protocol is crucial for achieving optimal performance and efficiency. Proxmox VE, a prominent open-source platform for virtualization, offers a variety of storage options for Virtual Machines (VMs) and containers. Among these, iSCSI and NFS stand out as popular choices. This article aims to delve into the strengths and weaknesses of both iSCSI and NFS within the context of Proxmox storage, providing insights to help IT professionals make informed decisions.

Introduction to iSCSI and NFS

iSCSI (Internet Small Computer Systems Interface) is a storage networking standard that enables the transport of block-level data over IP networks. It allows clients (initiators) to send SCSI commands to SCSI storage devices (targets) on remote servers. iSCSI is known for its block-level access, providing the illusion of a local disk to the operating system.

NFS (Network File System), on the other hand, is a distributed file system protocol allowing a user on a client computer to access files over a network in a manner similar to how local storage is accessed. NFS operates at the file level, providing shared access to files and directories.

Performance Considerations

When evaluating iSCSI and NFS for Proxmox storage, performance is a critical factor.

iSCSI offers the advantage of block-level storage, which generally translates to faster write and read speeds compared to file-level storage. This can be particularly beneficial for applications requiring high I/O performance, such as databases. However, the performance of iSCSI can be heavily dependent on network stability and configuration. Proper tuning and a dedicated network for iSCSI traffic can mitigate potential bottlenecks.

NFS might not match the raw speed of iSCSI in block-level operations, but its simplicity and efficiency in handling file operations make it a strong contender. NFS v4.1 and later versions introduce performance enhancements and features like pNFS (parallel NFS) that can significantly boost throughput and reduce latency in environments with high-demand file operations.

Scalability and Flexibility

NFS shines in terms of scalability and flexibility. As file-based storage, NFS allows for easier management, sharing, and scaling of files across multiple clients. The stateless design of NFSv3 simplifies recovery from network disruptions, while NFSv4, which is stateful, relies on its own lease-based recovery mechanisms. For environments requiring seamless access to shared files or directories, NFS is often the preferred choice.

iSCSI, while scalable, may require more intricate management as the storage needs grow. Each iSCSI LUN appears as a separate disk, and managing multiple disks across various VMs can become challenging. However, iSCSI’s block-level access provides a high degree of flexibility in terms of partitioning, file system choices, and direct VM disk access, which can be crucial for certain enterprise applications.

Ease of Setup and Management

From an administrative perspective, the ease of setup and ongoing management is another vital consideration.

NFS is generally perceived as easier to set up and manage, especially in Linux-based environments like Proxmox. The configuration is straightforward, and adding an NFS share as storage on a Proxmox node can be accomplished with minimal effort. This ease of use makes NFS an attractive option for smaller deployments or scenarios where IT resources are limited.

iSCSI requires a more detailed setup process, including configuring initiators, targets, and often dealing with CHAP authentication for security. While this complexity can be a barrier to entry, it also allows for fine-grained control over storage access and security, making iSCSI a robust choice for larger, security-conscious deployments.

Security and Reliability

Security and reliability are paramount in any storage solution.

iSCSI supports robust security features like CHAP authentication and can be integrated with IPsec for encrypted data transfer, providing a secure storage solution. The block-level access of iSCSI, while efficient, means that corruption on the block level can have significant repercussions, necessitating strict backup and disaster recovery strategies.

NFS, being file-based, might have more inherent vulnerabilities, especially in open networks. However, NFSv4 introduced improved security features, including Kerberos authentication and integrity checking. File-level storage also means that corruption is usually limited to individual files, potentially reducing the impact of data integrity issues.

Conclusion

Choosing between iSCSI and NFS for Proxmox storage depends on various factors, including performance requirements, scalability needs, administrative expertise, and security considerations. iSCSI offers high performance and fine-grained control suitable for intensive applications and large deployments. NFS, with its ease of use, flexibility, and file-level operations, is ideal for environments requiring efficient file sharing and simpler management. Ultimately, the decision should be guided by the specific needs and constraints of the deployment environment, with a thorough evaluation of both protocols’ strengths and weaknesses.

https://www.youtube.com/watch?v=2HfckwJOy7A

How to Resolve Proxmox VE Cluster Issues by Temporarily Stopping Cluster Services

If you’re managing a Proxmox VE cluster, you might occasionally encounter situations where changes to cluster configurations become necessary, such as modifying the Corosync configuration or addressing synchronization issues. One effective method to safely make these changes involves temporarily stopping the cluster services. In this article, we’ll walk you through a solution that involves stopping the `pve-cluster` and `corosync` services, and starting the Proxmox configuration filesystem daemon in local mode.

Understanding the Components

Before diving into the solution, it’s crucial to understand the role of each component:

- pve-cluster: This service manages the Proxmox VE cluster’s configurations and coordination, ensuring that all nodes in the cluster are synchronized.

- corosync: The Corosync Cluster Engine provides the messaging and membership services that form the backbone of the cluster, facilitating communication between nodes.

- pmxcfs (Proxmox Cluster File System): This is a database-driven file system designed for storing cluster configurations. It plays a critical role in managing the cluster’s shared configuration data.

Step-by-Step Solution

When you need to make changes to your cluster configurations, follow these steps to ensure a safe and controlled update environment:

- Prepare for Maintenance: Notify any users of the impending maintenance and ensure that you have a backup of all critical configuration files. It’s always better to be safe than sorry.

- Stop the pve-cluster Service: Begin by stopping the pve-cluster service to halt the synchronization process across the cluster. This can be done by executing the following command in your terminal:

  systemctl stop pve-cluster

- Stop the corosync Service: Next, stop the Corosync service to prevent any cluster membership updates while you’re making your changes. Use this command:

  systemctl stop corosync

- Start pmxcfs in Local Mode: With the cluster services stopped, you can now safely start pmxcfs in local mode using the -l flag. This allows you to work on the configuration files without immediate propagation to other nodes:

  pmxcfs -l

- Make Your Changes: With pmxcfs running in local mode, proceed to make the necessary changes to your cluster configuration files. Remember, any modifications made during this time should be carefully considered and double-checked for accuracy.

- Restart the Services: Once your changes are complete and verified, stop the local-mode pmxcfs instance (for example with killall pmxcfs), then restart the corosync and pve-cluster services to re-enable the cluster functionality:

  systemctl start corosync
  systemctl start pve-cluster

- Verify Your Work: After the services are back up, it’s essential to verify that your changes have been successfully applied and that the cluster is functioning as expected. Use Proxmox VE’s built-in diagnostic tools and commands, such as pvecm status, to check the cluster’s status.
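The sequence above can be sketched as a small Python wrapper that prints the commands in order before anything is run. This is only an illustration: the names `ENTER_LOCAL_MODE`, `LEAVE_LOCAL_MODE`, and `run_steps` are hypothetical, and the `killall pmxcfs` step (stopping the local-mode instance before the regular services return) is an assumption added here, not part of the steps as originally listed.

```python
import subprocess

# Commands to run before editing the configuration.
ENTER_LOCAL_MODE = [
    ["systemctl", "stop", "pve-cluster"],
    ["systemctl", "stop", "corosync"],
    ["pmxcfs", "-l"],  # start the config filesystem in local mode
]

# Commands to run after editing the configuration.
LEAVE_LOCAL_MODE = [
    ["killall", "pmxcfs"],  # assumption: stop the local-mode instance first
    ["systemctl", "start", "corosync"],
    ["systemctl", "start", "pve-cluster"],
    ["pvecm", "status"],  # check cluster membership and quorum
]


def run_steps(steps, dry_run=True):
    """Handle each command in order and stop at the first failure.

    With dry_run=True (the default) the commands are only printed.
    Returns the command lines that were handled.
    """
    handled = []
    for cmd in steps:
        line = " ".join(cmd)
        print(("[dry-run] " if dry_run else "[run] ") + line)
        if not dry_run:
            subprocess.run(cmd, check=True)
        handled.append(line)
    return handled
```

Calling `run_steps(ENTER_LOCAL_MODE)` prints the first three commands without touching the node. Passing `dry_run=False` executes them, so that should only be done on a node you intend to take out of cluster operation for maintenance.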

Conclusion

Modifying cluster configurations in a Proxmox VE environment can be a delicate process, requiring careful planning and execution. By temporarily stopping the pve-cluster and corosync services and leveraging the local mode of pmxcfs, you gain a controlled environment to make and apply your changes safely. Always ensure that you have backups of your configuration files before proceeding and thoroughly test your changes to avoid unintended disruptions to your cluster’s operation.

Remember, while this method can be effective for various configuration changes, it’s crucial to consider the specific needs and architecture of your cluster. When in doubt, consult the Proxmox VE documentation or seek assistance from the community or professional support.

AWS – Key Differences Between Network Access Control Lists (NACLs) and Security Groups

In the realm of cloud computing, safeguarding your resources against unauthorized access is paramount. Two pivotal components that play a crucial role in this security paradigm are Network Access Control Lists (NACLs) and Security Groups. Although both serve the purpose of regulating access to network resources, they operate at different levels and have distinct functionalities. This article delves into the core differences between NACLs and Security Groups to help you better understand their roles and applications in cloud security.

What are NACLs?

Network Access Control Lists (NACLs) act as a firewall for controlling traffic at the subnet level within a Virtual Private Cloud (VPC). They provide a layer of security that controls both inbound and outbound traffic at the network layer. NACLs work by evaluating traffic based on rules that either allow or deny traffic entering or exiting a subnet. These rules are evaluated in order, and the first rule that matches the traffic determines whether it’s allowed or denied.

What are Security Groups?

Security Groups, on the other hand, function as virtual firewalls for individual instances or resources. They control inbound and outbound traffic at the instance level, ensuring that only the specified traffic can reach the resource. Unlike NACLs, Security Groups evaluate all rules before deciding, and if any rule allows the traffic, it is permitted.
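To make the two evaluation styles concrete, here is a minimal simulation. It is not AWS code and does not call any AWS API. The rule dictionaries, the function names, and the example addresses are all made up for illustration.

```python
from ipaddress import ip_address, ip_network


def matches(rule, src_ip, port):
    """True when the packet's source address and port fall inside the rule."""
    low, high = rule["ports"]
    return ip_address(src_ip) in ip_network(rule["cidr"]) and low <= port <= high


def nacl_allows(rules, src_ip, port):
    """NACL style: lowest rule number first, the first match decides."""
    for rule in sorted(rules, key=lambda r: r["number"]):
        if matches(rule, src_ip, port):
            return rule["action"] == "allow"
    return False  # nothing matched: implicit deny


def security_group_allows(rules, src_ip, port):
    """Security group style: allow-only rules, any match permits the traffic."""
    return any(matches(rule, src_ip, port) for rule in rules)


nacl_rules = [
    {"number": 100, "action": "deny", "cidr": "203.0.113.0/24", "ports": (0, 65535)},
    {"number": 200, "action": "allow", "cidr": "0.0.0.0/0", "ports": (443, 443)},
]
sg_rules = [
    {"cidr": "0.0.0.0/0", "ports": (443, 443)},
]

print(nacl_allows(nacl_rules, "203.0.113.7", 443))          # False: rule 100 matches first
print(nacl_allows(nacl_rules, "198.51.100.9", 443))         # True: falls through to rule 200
print(security_group_allows(sg_rules, "203.0.113.7", 443))  # True: one allow rule matches
print(security_group_allows(sg_rules, "198.51.100.9", 22))  # False: no rule allows port 22
```

The first call shows why rule numbering matters for NACLs: the deny in rule 100 wins even though rule 200 would have allowed the same packet.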

Key Differences

- Level of Application:

  - NACLs: Operate at the subnet level, affecting all resources within that subnet.

  - Security Groups: Applied directly to instances, providing granular control over individual resources.

- Statefulness:

  - NACLs: Stateless, meaning responses to allowed inbound traffic are subject to outbound rules, and vice versa.

  - Security Groups: Stateful, allowing responses to allowed inbound traffic without requiring an outbound rule.

- Rule Evaluation:

  - NACLs: Process rules in a numbered order, with the first match determining the action.

  - Security Groups: Evaluate all rules before deciding, allowing traffic if any rule permits it.

- Default Behavior:

  - NACLs: The default NACL that comes with a VPC allows all inbound and outbound traffic. A custom NACL denies all inbound and outbound traffic until rules are configured to allow it.

  - Security Groups: Typically allow all outbound traffic and deny all inbound traffic by default, until specific allow rules are added.

- Use Cases:

  - NACLs: Ideal for broad, subnet-level rules, like blocking a specific IP range from accessing any resources in a subnet.

  - Security Groups: Best suited for more granular, resource-specific rules, such as allowing web traffic to a web server but not to other types of instances.
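The statefulness difference is the one that most often causes surprises, so a small model may help. The two classes below are hypothetical and simplified: they ignore protocols and CIDR ranges and track only a client address, the client's ephemeral port, and the server port.

```python
class StatelessFilter:
    """NACL-like: every packet is checked against the rules for its direction."""

    def __init__(self, inbound_ports, outbound_ports):
        self.inbound_ports = inbound_ports    # allowed destination ports, inbound
        self.outbound_ports = outbound_ports  # allowed destination ports, outbound

    def inbound(self, client, client_port, server_port):
        return server_port in self.inbound_ports

    def outbound_reply(self, client, client_port, server_port):
        # The reply is addressed to the client's ephemeral port.
        return client_port in self.outbound_ports


class StatefulFilter:
    """Security-group-like: replies to tracked connections are allowed."""

    def __init__(self, inbound_ports):
        self.inbound_ports = inbound_ports
        self.tracked = set()

    def inbound(self, client, client_port, server_port):
        if server_port in self.inbound_ports:
            self.tracked.add((client, client_port, server_port))
            return True
        return False

    def outbound_reply(self, client, client_port, server_port):
        return (client, client_port, server_port) in self.tracked


conn = ("198.51.100.9", 50123, 443)  # example client, ephemeral port, HTTPS

nacl_like = StatelessFilter(inbound_ports={443}, outbound_ports=set())
sg_like = StatefulFilter(inbound_ports={443})
print(nacl_like.inbound(*conn), nacl_like.outbound_reply(*conn))  # True False
print(sg_like.inbound(*conn), sg_like.outbound_reply(*conn))      # True True

# Opening the ephemeral port range outbound lets the stateless reply through.
nacl_fixed = StatelessFilter({443}, range(1024, 65536))
print(nacl_fixed.outbound_reply(*conn))  # True
```

In the stateless case the request gets in but the reply is dropped until an outbound rule covers the client's ephemeral port, which is why NACLs usually need rules in both directions.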

Conclusion

Understanding the differences between NACLs and Security Groups is crucial for effectively managing network security in a cloud environment. While NACLs offer a first line of defense at the subnet level, Security Groups provide more granular control at the instance level. By leveraging both in your security strategy, you can ensure a robust defense-in-depth approach to securing your cloud resources.

Remember, the optimal use of NACLs and Security Groups depends on your specific security requirements and network architecture. It’s essential to carefully plan and implement these components to achieve the desired security posture for your cloud environment.

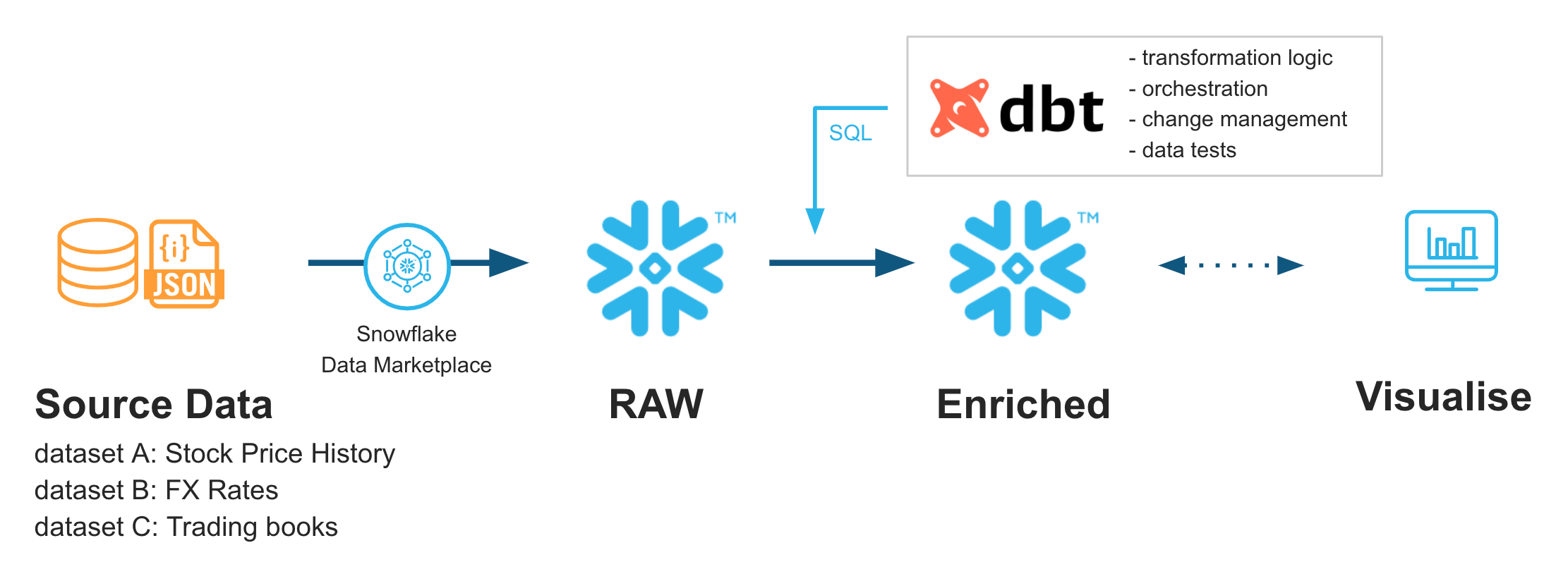

Unlocking Data Transformation with DBT: A Comprehensive Guide

Introduction

In an era where data is akin to digital gold, the ability to refine this raw resource into actionable insights is crucial for any business aiming for success. DBT (Data Build Tool) emerges as a beacon of efficiency in the vast sea of data transformation tools, providing a structured, collaborative, and version-controlled environment for data analysts and engineers.


What is DBT?

DBT stands for Data Build Tool, an open-source software application that redefines the way data teams approach data transformation. It acts as a bridge between data engineering and data analysis, allowing teams to transform, test, and document data workflows efficiently. DBT treats data transformation as a craft, turning SQL queries into testable, deployable, and documentable artifacts.

Diving Deeper into DBT’s Key Features

- Version Control: DBT’s seamless integration with version control systems like Git ensures that every change to the data transformation scripts is tracked, allowing for collaborative development and historical versioning.

- Testing: With DBT, data reliability and accuracy take the forefront. DBT provides a framework for writing and executing tests against your data models, ensuring that the data meets the specified business rules and quality standards.

- Documentation: DBT automatically generates documentation from your data models, making it easier for teams to understand the data’s flow, dependencies, and transformations. This self-documenting aspect of DBT promotes better knowledge sharing and data governance within organizations.
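The testing idea can be illustrated without DBT itself. A data test is, in essence, a query that selects the rows violating a rule, and the test passes when that query returns nothing. The sketch below mimics that idea with Python's built-in sqlite3 module. It is not DBT's implementation, and the `orders` table, its columns, and the `run_checks` helper are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER);
    INSERT INTO orders VALUES (1, 10), (2, 11), (2, 12), (3, NULL);
""")

# Each check is a query that selects the rows violating a rule.
CHECKS = {
    "order_id is unique": """
        SELECT order_id FROM orders
        GROUP BY order_id HAVING COUNT(*) > 1
    """,
    "customer_id is not null": """
        SELECT order_id FROM orders WHERE customer_id IS NULL
    """,
}


def run_checks(connection, checks):
    """Return {check name: failing rows}; an empty list means the check passed."""
    return {name: connection.execute(sql).fetchall() for name, sql in checks.items()}


results = run_checks(conn, CHECKS)
for name, failures in results.items():
    status = "PASS" if not failures else f"FAIL ({len(failures)} rows)"
    print(f"{name}: {status}")
```

With the sample rows, both checks fail: order_id 2 appears twice and order 3 has no customer. In a real project, checks like these would be declared on the model and run by the tool rather than written by hand.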

The Transformative Impact of DBT

DBT empowers data teams by bridging the gap between data engineering and analytics, providing a platform that enhances data transformation workflows with efficiency, transparency, and quality. Its ability to treat transformations as code brings software engineering best practices into the data analytics realm, fostering a more collaborative and disciplined approach to data processing.

Implementing DBT in Your Data Stack

Implementing DBT starts with its installation and connecting it to your data warehouse. The next step involves defining your data models, which serve as the foundation for your transformations. DBT supports a wide range of data warehouses and databases, including Snowflake, BigQuery, Redshift, and more, ensuring compatibility and flexibility in various data ecosystems.

Expanding Horizons: DBT’s Applications in Different Industries

DBT’s versatility shines across various industries, from e-commerce to healthcare, finance, and beyond. It streamlines data operations, enabling businesses to harness their data effectively for reporting, analytics, and decision-making. DBT’s ability to manage complex data transformations at scale makes it a valuable asset for any data-driven organization looking to optimize its data workflows.

Conclusion: The Future of Data Transformation with DBT

In the rapidly evolving data landscape, DBT stands out as a tool that not only simplifies data transformation but also elevates it to a level where accuracy, efficiency, and collaboration are paramount. By adopting DBT, organizations can look forward to a future where data is not just a resource but a well-oiled engine driving informed decisions and strategic insights.

Join the DBT Revolution

Embracing DBT means stepping into a world where data transformation is no longer a bottleneck but a catalyst for growth and innovation. Whether you’re a data analyst, engineer, or business leader, DBT offers the tools and community support to transform your data practices. Dive into the DBT community, explore its rich resources, and start your journey towards data excellence.

My Resume

Education

MSc Data Science And Artificial Intelligence

2022 - 2023

Training in data science & artificial intelligence methods, emphasizing mathematical and computer science perspectives.

Master In Management

EDHEC Business School (2005 - 2009)

English Track Program - Major in Entrepreneurship.

BSc in Applied Mathematics and Social Sciences

University Paris 7 Denis Diderot (2006)

General university studies with a focus on applied mathematics and social sciences.

Higher School Preparatory Classes

Lycée Jacques Decour - Paris (2002 - 2004)

Classe préparatoire aux Grandes Écoles de Commerce. Science path.

Scientific Baccalaureate

1999 - 2002

Mathematics Major

Data Science

Python

SQL

Machine learning libraries

Data visualization tools

Big Data (Spark, Hive)

Data Analysis

Spreadsheet software

Data visualization tools (Tableau, PowerBI, and Matplotlib)

Statistical software (SAS, SPSS)

SAP Business Objects

Database management systems (SQL, MySQL)

Development

HTML

CSS

JAVASCRIPT

SOFTWARE

Version Control Systems

MLOps

CI/CD

Docker and Kubernetes

AutoML

Model serving frameworks

Prometheus, Grafana

Job Experience

Consulting, Automation and Security

(2019 - Present)

Implementation of automated reporting tools via SAP Business Objects, processing and securing sensitive data (data wrangling, encryption, redundancy), remote monitoring management solutions via connected objects (IoT) and image processing, internal pentesting and network security consulting, VPN implementation, outsourcing of servers.

Consulting, E-commerce and Digital Marketing

(2017 - 2019)

Consulting in e-commerce and digital marketing (Bangkok area), Booking.com, Airbnb, Agoda online booking management for third parties, SEO in the hotel industry.

Consulting, internal company network

(2016 - 2017)

Implementation of corporate networks and virtualization solutions (rack cabling, firewalls, Proxmox virtualization), management of firewalls and internal networks (pfSense).

Entrepreneurship Experience

Founder, web developer

(2015 - 2020)

Programming and maintenance of websites and web applications, consulting in digitalization and process optimization for local SMEs, implementation of turnkey e-commerce solutions.

Corporate Banking

Assistant Fund Manager

Credit Portfolio Management - CALYON - 2008

- Preparation of committee notes for new ABS/CDO credit derivative investments

- Calculation and measurement of portfolio risk (Value-at-Risk, exotic and vanilla ABS, SWAP, liquidity lines)

- Daily monitoring of credit derivatives portfolio structures (Mark-to-Market, P&L, re-financing)

- Design of risk measurement and decision support tools in VBA

Credit Risk Analyst

Risk and Controls Department - NATIXIS - Paris

- Study of financing files for review by the credit committee (structured finance, commodity trade finance, and car manufacturers)

- Financial analysis, rating, and credit risk analysis of a portfolio of companies

- Financing files studied: from €1m to €1000m

Retail Banking

Assistant Business Account Manager

BNP Paribas - 2006

- Writing reports on business plans for SMEs

- Risk and feasibility analysis and decision making

- Negotiation of financing packages with applicants

Contact Me

Mehdi Fekih

Data Scientist.

I am available for freelance work. Connect with me via this contact form or feel free to send me an email.

Phone: +33 (0) 7 82 90 60 71 Email: mehdi.fekih@edhec.com