Hi, I’m Mehdi Fekih

I am a Data Scientist. Data Engineer. Developer. Home Automation Specialist.

My Blog

Configuring Amazon S3 Access Keys Securely for UpdraftPlus (WordPress)

Using Amazon Web Services S3 as remote storage for UpdraftPlus is a common and reliable approach for backing up a WordPress site.

However, many users are confused when AWS warns against long-term access keys and suggests alternative authentication methods.

This article explains the correct and secure way to configure S3 access for UpdraftPlus, why AWS shows those warnings, and what best practices actually apply in a real WordPress environment.

Why AWS Warns About Long-Term Access Keys

AWS strongly encourages modern authentication mechanisms such as:

- IAM Roles

- Temporary credentials (STS)

- Workload identity federation

These are excellent practices when your application runs inside AWS (EC2, ECS, Lambda).

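However, a WordPress site hosted outside AWS has no IAM role it can assume: roles are attached to AWS compute resources such as EC2 instances or Lambda functions.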

If your WordPress site runs on:

- Shared hosting

- A VPS (DigitalOcean, OVH, Hetzner, etc.)

- On-premise infrastructure

then access keys are the practical and supported solution.

AWS warnings are contextual, not prohibitions.

The Correct AWS Use Case for UpdraftPlus

When creating an access key in IAM, AWS asks you to select a use case.

✅ Correct choice:

Application running outside AWS

This matches the reality:

- UpdraftPlus is a third-party PHP application

- It runs outside AWS

- It only needs programmatic access to S3

Selecting this option does not weaken security and does not change how credentials work.

It simply helps AWS categorize usage internally.

Secure Architecture Overview

WordPress
└─ UpdraftPlus
   └─ IAM User (restricted)
      └─ S3 Bucket (private)

Key principle: least privilege.

Step 1 – Create a Dedicated S3 Bucket

Best practices:

- Private bucket (Block all public access)

- Dedicated to backups only

- Versioning enabled (optional)

- Optional lifecycle rules (auto-delete old backups)

Example bucket name:

my-wp-backups-prod

Step 2 – Create a Dedicated IAM User

Never use:

- Root credentials

- A shared IAM user

- Broad policies like AmazonS3FullAccess

Create a single-purpose IAM user, for example:

updraftplus-wordpress

Programmatic access only.

Step 3 – Attach a Minimal IAM Policy

This policy allows only what UpdraftPlus needs:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "UpdraftPlusS3Access",
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:GetObject",
        "s3:DeleteObject",
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::my-wp-backups-prod",
        "arn:aws:s3:::my-wp-backups-prod/*"
      ]
    }
  ]
}

This prevents:

- Access to other buckets

- Account-wide damage if credentials leak
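The policy lists two ARNs because S3 checks each action against a different resource: s3:ListBucket applies to the bucket ARN, while s3:GetObject, s3:PutObject and s3:DeleteObject apply to object ARNs (bucket/key). The sketch below illustrates this with a deliberately simplified evaluator, assuming Allow statements only, exact action names and `*` wildcards in resources; real IAM evaluation also handles explicit Deny, conditions, action wildcards and more. The object key is hypothetical.

```javascript
// Simplified, illustrative IAM check: Allow statements only, exact action
// names, "*" wildcards in resource ARNs. Not a full IAM policy evaluator.
const policy = {
  Version: "2012-10-17",
  Statement: [
    {
      Sid: "UpdraftPlusS3Access",
      Effect: "Allow",
      Action: ["s3:PutObject", "s3:GetObject", "s3:DeleteObject", "s3:ListBucket"],
      Resource: ["arn:aws:s3:::my-wp-backups-prod", "arn:aws:s3:::my-wp-backups-prod/*"],
    },
  ],
};

// "*" matches any run of characters (including "/"); everything else is literal.
function arnMatches(pattern, arn) {
  const body = pattern
    .split("*")
    .map((part) => part.replace(/[.*+?^${}()|[\]\\]/g, "\\$&"))
    .join(".*");
  return new RegExp(`^${body}$`).test(arn);
}

function isAllowed(action, resourceArn) {
  return policy.Statement.some(
    (s) =>
      s.Effect === "Allow" &&
      [].concat(s.Action).includes(action) &&
      [].concat(s.Resource).some((r) => arnMatches(r, resourceArn))
  );
}

const bucket = "arn:aws:s3:::my-wp-backups-prod";
const backup = `${bucket}/wordpress/site-prod/backup_db.gz`; // hypothetical object key
console.log(isAllowed("s3:ListBucket", bucket)); // true: bucket-level action, bucket ARN
console.log(isAllowed("s3:PutObject", backup)); // true: object action, matches bucket/*
console.log(isAllowed("s3:GetObject", "arn:aws:s3:::another-bucket/x")); // false: other bucket
console.log(isAllowed("s3:DeleteBucket", bucket)); // false: action not granted
```

Dropping either ARN breaks one side: without the bucket ARN, listing fails; without `bucket/*`, uploads and downloads fail.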

Step 4 – Generate Access Keys

Generate:

- Access Key ID

- Secret Access Key

The secret access key is displayed only once, when the key is created, so store both values securely right away and never commit them to Git.

Add a description such as: UpdraftPlus – production backups

Step 5 – Configure UpdraftPlus

In WordPress:

- Settings → UpdraftPlus → Settings

- Select Amazon S3

- Enter:

  - Access Key ID

  - Secret Access Key

  - Bucket name

  - Region

- Set a bucket subpath (recommended), for example:

  wordpress/site-prod/

- Save and test the connection

If it fails, the cause is almost always:

- Wrong region

- Incorrect IAM policy

- Typo in bucket name

Optional Hardening (Recommended)

Lifecycle Rules

Automatically delete old backups:

- Daily: keep 14 days

- Monthly: keep 6 months

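This avoids silent storage cost growth. Lifecycle rules select objects by prefix (or tag) and age, so different retention periods only work if daily and monthly backups are written under different subpaths. If versioning is enabled, also expire noncurrent versions; otherwise deleted backups survive as old versions and keep adding to the bill.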
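A minimal sketch of a matching lifecycle configuration, built as a plain object and printed as JSON, for example to save as lifecycle.json and pass to `aws s3api put-bucket-lifecycle-configuration --bucket my-wp-backups-prod --lifecycle-configuration file://lifecycle.json`. It assumes, hypothetically, that daily and monthly backups are stored under `wordpress/site-prod/daily/` and `wordpress/site-prod/monthly/`, and approximates 6 months as 180 days. The `NoncurrentVersionExpiration` part only has an effect when versioning is enabled.

```javascript
// Builds one S3 lifecycle rule in the JSON shape used by
// put-bucket-lifecycle-configuration. Prefixes below are hypothetical.
function expireRule(id, prefix, days) {
  return {
    ID: id,
    Filter: { Prefix: prefix },
    Status: "Enabled",
    Expiration: { Days: days },
    // With versioning: remove versions `days` after they were replaced or deleted.
    NoncurrentVersionExpiration: { NoncurrentDays: days },
  };
}

const lifecycle = {
  Rules: [
    expireRule("expire-daily-backups", "wordpress/site-prod/daily/", 14),
    expireRule("expire-monthly-backups", "wordpress/site-prod/monthly/", 180), // ~6 months
  ],
};

console.log(JSON.stringify(lifecycle, null, 2));
```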

Server-Side Encryption

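Since January 2023, S3 applies SSE-S3 (AES-256) default encryption to all new uploads; confirm that default encryption is set in the bucket's Properties tab.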

No code changes required.

IP Restriction (Advanced)

If your hosting provider gives you a static outbound IP address, you can add an aws:SourceIp condition to the IAM policy so the keys only work from that address or range.
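One way to do this is an explicit Deny statement that uses the `aws:SourceIp` condition key with `NotIpAddress`. The sketch below adds such a statement to the Step 3 policy; the helper name is hypothetical and `203.0.113.10/32` is a documentation placeholder address, not your server. Because an explicit Deny overrides any Allow, a wrong CIDR will lock UpdraftPlus out until the policy is fixed.

```javascript
// Hypothetical helper: returns a copy of the policy with an explicit Deny
// for every S3 request that does not originate from the allowed CIDR ranges.
function withSourceIpRestriction(policy, bucketName, allowedCidrs) {
  const denyOthers = {
    Sid: "DenyOutsideHostingIp",
    Effect: "Deny",
    Action: "s3:*",
    Resource: [`arn:aws:s3:::${bucketName}`, `arn:aws:s3:::${bucketName}/*`],
    Condition: { NotIpAddress: { "aws:SourceIp": allowedCidrs } },
  };
  return { ...policy, Statement: [...policy.Statement, denyOthers] };
}

const basePolicy = {
  Version: "2012-10-17",
  Statement: [
    {
      Sid: "UpdraftPlusS3Access",
      Effect: "Allow",
      Action: ["s3:PutObject", "s3:GetObject", "s3:DeleteObject", "s3:ListBucket"],
      Resource: ["arn:aws:s3:::my-wp-backups-prod", "arn:aws:s3:::my-wp-backups-prod/*"],
    },
  ],
};

const restricted = withSourceIpRestriction(basePolicy, "my-wp-backups-prod", ["203.0.113.10/32"]);
console.log(JSON.stringify(restricted, null, 2));
```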

Common Mistakes to Avoid

- Using root access keys

- Granting AmazonS3FullAccess

- Making the bucket public

- Skipping lifecycle rules

- Reusing credentials across multiple sites

Final Verdict

For WordPress + UpdraftPlus:

- Long-term access keys are normal

- AWS warnings are generic

- Least-privilege IAM policies are what actually matter

Used correctly, this setup is secure, stable, and industry-standard.

AliExpress: The Bundle Deal Problem


Tired of AliExpress forcing you into “Bundle Deals” when you just want to buy a single item? This free userscript intercepts those sneaky links and redirects you straight to the actual product page.

The Bundle Deal Problem

AliExpress has been increasingly pushing shoppers toward “Bundle Deals” — grouped offers that look attractive but prevent you from purchasing a single item at its regular price. When you click on a product from search results, you land on an intermediate page that pressures you into adding more items to your cart.

Here’s what a bundle link looks like:

aliexpress.com/ssr/300000512/BundleDeals2?productIds=1005008154245742...

Instead of the direct product link:

aliexpress.com/item/1005008154245742.html

This dark pattern wastes your time and tricks you into spending more than you intended. Let’s fix that.

The Solution: AliExpress Bundle Bypass

This userscript automatically detects Bundle Deal links and converts them into direct product page links. No more hunting for workarounds — the script handles everything silently in the background.

Features

- Instant redirect — If you land on a Bundle page, you’re immediately redirected to the actual product

- Link rewriting — Bundle links in search results are converted in real-time as the page loads

- Click interception — Even if a link wasn’t converted, clicks are caught and redirected

- Debug panel — A visual overlay shows how many links were detected and fixed

- Visual indicators — Converted links get a green outline for confirmation

Installation Guide

The script works with any userscript manager extension. Follow the instructions for your browser below.

🟡 Google Chrome

- Install Tampermonkey from the Chrome Web Store

- Click the Tampermonkey icon in your browser toolbar

- Select “Create a new script…”

- Delete the default template code

- Paste the script code (see below)

- Press Ctrl+S (or Cmd+S on Mac) to save

- Refresh AliExpress — the debug panel should appear in the top-right corner

🟠 Mozilla Firefox

- Install Violentmonkey or Greasemonkey from Firefox Add-ons

- Click the extension icon in your toolbar

- Click the “+” button to create a new script

- Paste the script code

- Save with Ctrl+S

- Navigate to AliExpress and test it out

🔵 Microsoft Edge

- Install Tampermonkey for Edge from the Microsoft Edge Add-ons store

- Click the Tampermonkey icon

- Select “Create a new script…”

- Paste the code and save

🦁 Brave Browser

Brave is Chromium-based, so the process is identical to Chrome. Install Tampermonkey from the Chrome Web Store — it’s fully compatible with Brave.

🍎 Safari (macOS)

Safari needs a dedicated userscript extension from the App Store. Your options are:

- Userscripts (free, open-source)

- Tampermonkey for Safari (paid)

The Script

Copy the entire code below and paste it into your userscript manager:

// ==UserScript==
// @name         AliExpress Bundle Bypass
// @namespace    https://github.com/user/aliexpress-bundle-bypass
// @version      3.1.0
// @description  Bypass bundle deals and go directly to product pages
// @author       Community
// @match        *://*.aliexpress.com/*
// @match        *://*.aliexpress.ru/*
// @match        *://*.aliexpress.us/*
// @grant        GM_addStyle
// @run-at       document-end
// @license      MIT
// ==/UserScript==

(function() {
  'use strict';

  const processedLinks = new WeakSet();
  let isScanning = false;
  let scanTimeout = null;
  let scannedCount = 0;
  let foundCount = 0;
  let convertedCount = 0;

  // Extract the product ID from a Bundle Deals URL (productIds=... or x_object_id:/%3A...)
  function extractProductId(url) {
    let match = url.match(/productIds=(\d+)/);
    if (match) return match[1];
    // Match the separator as a whole (":" or "%3A"); a character class such as
    // [%3A:]+ would also swallow a leading "3" of the product ID.
    match = url.match(/x_object_id(?::|%3A)(\d+)/i);
    if (match) return match[1];
    return null;
  }

  function productUrl(productId) {
    return `https://www.aliexpress.com/item/${productId}.html`;
  }

  // Layer 1: redirect immediately if we are already on a Bundle page
  if (window.location.href.includes('BundleDeals')) {
    const productId = extractProductId(window.location.href);
    if (productId) {
      window.location.replace(productUrl(productId));
      return;
    }
  }

  // Debug panel
  function createDebugPanel() {
    const panel = document.createElement('div');
    panel.id = 'bundle-bypass-debug';
    panel.innerHTML = `
      <div style="position: fixed; top: 10px; right: 10px; background: #1a1a2e; color: #0f0;
                  font-family: monospace; font-size: 12px; padding: 10px 15px; border-radius: 8px;
                  z-index: 999999; max-width: 350px; box-shadow: 0 4px 20px rgba(0,255,0,0.3);
                  border: 1px solid #0f0;">
        <div style="font-weight: bold; margin-bottom: 8px; color: #0ff;">⚡ BUNDLE BYPASS v3.1</div>
        <div id="bb-status">Active ✓</div>
        <div id="bb-stats" style="margin-top: 8px;">
          Scanned: <span id="bb-scanned">0</span> |
          Bundles: <span id="bb-found">0</span> |
          Fixed: <span id="bb-converted">0</span>
        </div>
        <button id="bb-close" style="position: absolute; top: 5px; right: 10px; background: none; border: none; color: #f00; cursor: pointer;">✕</button>
      </div>`;
    return panel;
  }

  function updateStats() {
    const el1 = document.getElementById('bb-scanned');
    const el2 = document.getElementById('bb-found');
    const el3 = document.getElementById('bb-converted');
    if (el1) el1.textContent = scannedCount;
    if (el2) el2.textContent = foundCount;
    if (el3) el3.textContent = convertedCount;
  }

  // Layer 2: rewrite Bundle links already present in the page
  function scanLinks() {
    if (isScanning) return;
    isScanning = true;
    document.querySelectorAll('a[href*="BundleDeals"]').forEach(link => {
      if (processedLinks.has(link)) return;
      processedLinks.add(link);
      scannedCount++;
      const productId = extractProductId(link.href);
      if (productId) {
        foundCount++;
        link.dataset.originalBundle = link.href;
        link.href = productUrl(productId);
        link.classList.add('bb-converted');
        convertedCount++;
      }
    });
    updateStats();
    isScanning = false;
  }

  function debouncedScan() {
    if (scanTimeout) clearTimeout(scanTimeout);
    scanTimeout = setTimeout(scanLinks, 200);
  }

  // Layer 3: click interceptor as a fallback (capture phase, before page handlers)
  document.addEventListener('click', function(e) {
    const link = e.target.closest('a');
    if (!link || !link.href.includes('BundleDeals')) return;
    const productId = extractProductId(link.href);
    if (!productId) return;
    e.preventDefault();
    e.stopPropagation();
    const url = productUrl(productId);
    if (link.target === '_blank') {
      window.open(url, '_blank');
    } else {
      window.location.href = url;
    }
  }, true);

  // Mutation observer for dynamically loaded content.
  // Skip a batch only if *every* mutation comes from the debug panel itself;
  // with .some(), new product cards arriving alongside a panel update were ignored.
  const observer = new MutationObserver(mutations => {
    if (mutations.every(m => m.target.closest('#bundle-bypass-debug'))) return;
    debouncedScan();
  });

  // Inject CSS for visual feedback
  const style = document.createElement('style');
  style.textContent = `
    a.bb-converted { outline: 3px solid #0f0 !important; outline-offset: 2px !important; }
    a[href*="BundleDeals"]:not(.bb-converted) { outline: 3px dashed #ff0 !important; }
  `;
  document.head.appendChild(style);

  // Initialize
  function init() {
    document.body.appendChild(createDebugPanel());
    // Only wire up the close button if the panel exists, so the line above can be removed safely
    const closeButton = document.getElementById('bb-close');
    if (closeButton) {
      closeButton.onclick = () => document.getElementById('bundle-bypass-debug').remove();
    }
    scanLinks();
    observer.observe(document.body, { childList: true, subtree: true });
    // Re-scan every 2 seconds, 5 times, after the initial load
    let polls = 0;
    const pollId = setInterval(() => {
      scanLinks();
      if (++polls >= 5) clearInterval(pollId);
    }, 2000);
  }

  if (document.readyState === 'loading') {
    document.addEventListener('DOMContentLoaded', init);
  } else {
    init();
  }
})();

How to Use

Once installed, the script runs automatically on all AliExpress pages. You’ll notice:

- A debug panel in the top-right corner showing real-time statistics

- A green outline around links that have been successfully converted

- A yellow dashed outline around Bundle links that haven’t been processed yet (rare)

You can close the debug panel by clicking the red ✕ button. The script will continue running in the background.

Troubleshooting

The debug panel doesn’t appear

- Make sure the script is enabled in your userscript manager

- Hard refresh the page with Ctrl+Shift+R (or Cmd+Shift+R on Mac)

- Check that the script is set to run on aliexpress.com

Counters stay at 0

This is normal if the current page doesn’t contain any Bundle links. Try searching for a product to see the script in action — Bundle Deals typically appear in search results.

The script stopped working

AliExpress frequently updates their website. If the script breaks, open DevTools (F12), inspect a product card link, and check if they still use the BundleDeals pattern in URLs. If the format changed, the script will need to be updated.

Disabling the Debug Panel

If you find the overlay distracting after confirming the script works, you can disable it permanently:

- Open the script in your userscript manager

- Find the init() function near the bottom

- Delete or comment out this line:

document.body.appendChild(createDebugPanel());

- Save the script

How It Works

The script uses three layers of protection to ensure Bundle links are bypassed:

- Instant redirect — If you’re already on a Bundle page, it extracts the product ID and redirects immediately

- DOM scanning — It scans all links on the page and rewrites Bundle URLs to direct product URLs

- Click interception — As a fallback, it captures clicks on Bundle links and redirects them

A MutationObserver watches for dynamically loaded content (infinite scroll, lazy loading) and processes new links as they appear.

Contributing

Found a bug or want to improve the script? Feel free to fork, modify, and share. If AliExpress changes their URL patterns, the key function to update is extractProductId().
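The snippet below is a minimal way to check the ID extraction outside the browser with Node.js, for example after AliExpress changes its URL format. It is a standalone copy of extractProductId() in which the x_object_id separator is matched as a whole (`:` or `%3A`) rather than with a character class like `[%3A:]+`, which also consumes a leading 3 of IDs that start with that digit. The second sample URL is made up to exercise that case, and `toDirectProductUrl()` is a hypothetical helper.

```javascript
// Standalone copy of the ID extraction, runnable in Node.js for quick checks.
function extractProductId(url) {
  let match = url.match(/productIds=(\d+)/);
  if (match) return match[1];
  match = url.match(/x_object_id(?::|%3A)(\d+)/i); // ":" or URL-encoded ":"
  if (match) return match[1];
  return null;
}

// Hypothetical helper: Bundle URL -> direct product URL, or null if no ID found.
function toDirectProductUrl(url) {
  const id = extractProductId(url);
  return id ? `https://www.aliexpress.com/item/${id}.html` : null;
}

const samples = [
  "https://www.aliexpress.com/ssr/300000512/BundleDeals2?productIds=1005008154245742",
  "https://www.aliexpress.com/ssr/300000512/BundleDeals2?x_object_id%3A3256804567890", // made-up URL
  "https://www.aliexpress.com/item/1005008154245742.html", // already direct: no bundle ID
];

for (const url of samples) {
  console.log(url, "->", toDirectProductUrl(url));
}
```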

Tested on Chrome 120+, Firefox 121+, Edge 120+, Brave 1.61+, and Safari 17+. Last updated: January 2025. Licensed under MIT.

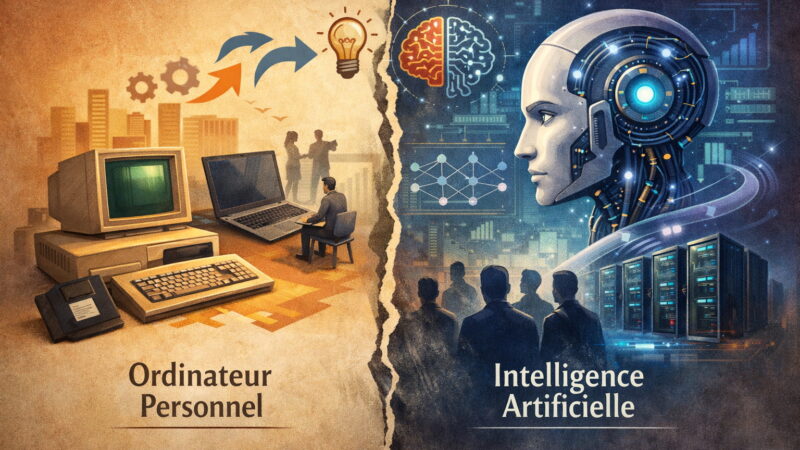

Artificial Intelligence and the Personal Computer: A Valid Comparison, but an Incomplete One

As artificial intelligence (AI) systems rapidly improve and spread across industries, a common argument emerges: the AI shift is comparable to the rise of the personal computer (PC) in the 1980s and 1990s. According to this view, AI represents another technological wave—initially disruptive, eventually normalized—requiring adaptation rather than concern.

While this comparison is not without merit, it has clear limitations. A closer examination reveals that, although the two transformations share similarities, the nature and implications of the AI shift may be fundamentally different.

1. Shared characteristics between the PC and AI revolutions

There are legitimate reasons why the comparison persists.

- General-purpose technologies: Both the PC and AI are applicable across a wide range of sectors.

- Productivity gains: Each promises efficiency improvements through automation and digitalization.

- Initial anxiety: Both sparked fears about job losses and skill obsolescence.

- Learning curve: Adoption in both cases requires new competencies and changes in workflows.

From this perspective, viewing AI as part of a recurring historical pattern is understandable.

2. Passive tools versus active systems

A key difference lies in the nature of the technology itself.

- The personal computer is fundamentally a passive tool. It executes explicit instructions provided by a human user.

- Modern AI systems function as active systems. They generate content, infer patterns, and can operate semi-autonomously.

This distinction reshapes the human role. While PCs extend human capabilities, AI systems can, in some cases, perform tasks independently from end to end.

3. The type of work affected

Earlier waves of automation primarily targeted:

- physical labor,

- repetitive administrative tasks.

AI increasingly impacts work traditionally associated with human cognition:

- writing,

- analysis,

- software development,

- translation,

- design.

The PC required human judgment to interpret and apply information. AI systems increasingly operate within that interpretive layer, raising different questions about task allocation.

4. Speed of change and adoption

The tempo of transformation also differs significantly.

- Personal computers spread gradually over several decades.

- AI systems evolve through rapid iteration cycles, with noticeable capability jumps in months rather than years.

This acceleration compresses the time available for workers, institutions, and education systems to adapt incrementally.

5. Access, infrastructure, and concentration

The PC contributed to a broad democratization of computing:

- relatively affordable hardware,

- open development ecosystems,

- decentralized innovation.

Contemporary AI relies more heavily on:

- large-scale infrastructure,

- massive datasets,

- substantial capital investment.

As a result, questions about centralization of technological and economic power play a more prominent role than they did during the PC era.

6. Rethinking the idea of “adaptation”

The claim that one can simply “adapt” assumes that skills, once acquired, remain valuable long enough to justify the investment.

In the context of AI:

- certain skills may become obsolete quickly,

- continuous learning becomes less stable and more fragmented.

Adaptation remains possible, but it may no longer offer the same long-term security it once did.

7. A helpful analogy—with limits

Comparing AI to the personal computer can help reduce panic and situate innovation within historical precedent. However, the analogy becomes insufficient when examining deeper structural effects.

The PC reshaped how people worked.

AI increasingly challenges which tasks require human involvement at all.

Conclusion

The comparison between artificial intelligence and the personal computer is neither entirely wrong nor fully adequate. It highlights shared dynamics of technological adoption while obscuring meaningful differences in system behavior, speed of change, and economic structure.

Rather than viewing AI as a simple repetition of past technological shifts, it may be more accurate to see it as a transformation whose long-term implications—for work, skills, and value creation—are still unfolding.

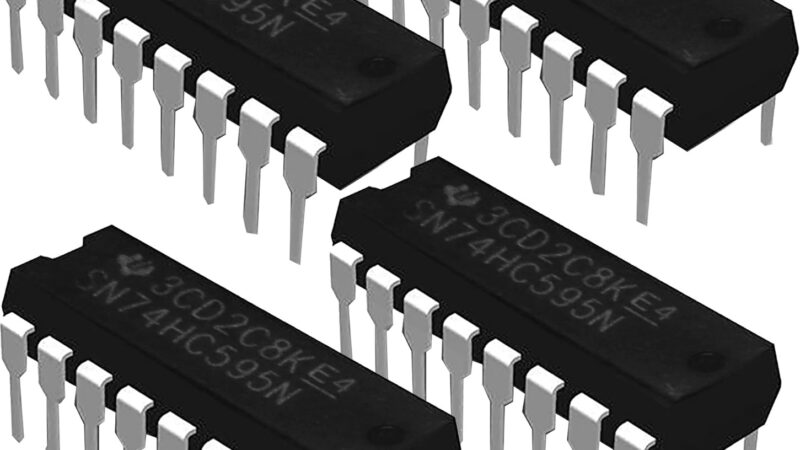

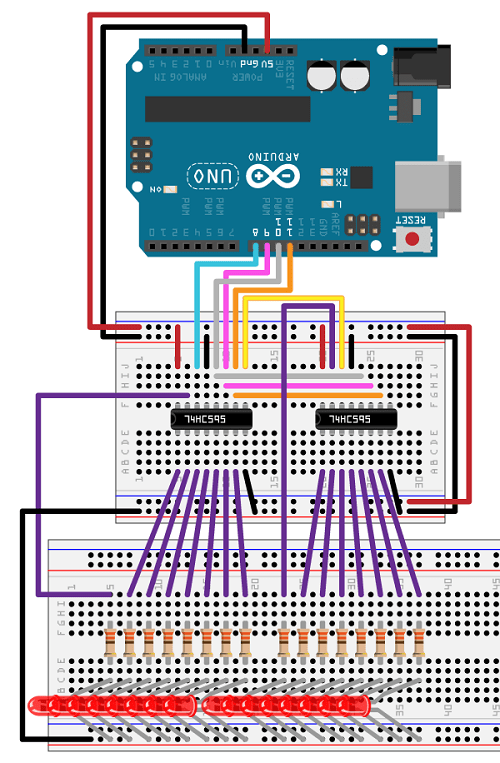

SIPO and PISO shift registers with Arduino and ESP (ESP8266 / ESP32)

When working with Arduino, ESP8266 or ESP32, you quickly hit a simple limit: not enough GPIO pins.

Instead of stacking boards or using complex expanders, there is a very clean and proven solution: shift registers.

The two main types are:

- SIPO – Serial In, Parallel Out

- PISO – Parallel In, Serial Out

Why use shift registers?

- Save GPIO pins

- Clean wiring

- Easy to scale

- Very cheap

- Used everywhere in real hardware (industry, vending, panels)

With only 3 pins, you can manage 8, 16, 32+ inputs or outputs.

SIPO – Serial In, Parallel Out

What it does

You send data bit by bit (serial), and the chip exposes them all at once on parallel outputs.

Common chip

74HC595

- 8 digital outputs

- Can be daisy-chained

- Very reliable

- Fast enough for almost any project

Important pins

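| Pin | Role |
|---|---|
| DS | Serial data |
| SH_CP | Shift clock |
| ST_CP | Latch (storage register clock) |
| Q0–Q7 | Outputs |
| Q7’ | Serial out (for daisy-chaining) |
| OE | Output enable, active LOW (tie to GND if not driven) |
| MR | Master reset, active LOW (tie to VCC if not driven) |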

Arduino example – driving 8 LEDs

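int dataPin = 11;  // 74HC595 DS (serial data)
int clockPin = 13; // 74HC595 SH_CP (shift clock)
int latchPin = 10; // 74HC595 ST_CP (latch)

void setup() {
  pinMode(dataPin, OUTPUT);
  pinMode(clockPin, OUTPUT);
  pinMode(latchPin, OUTPUT);
}

void loop() {
  digitalWrite(latchPin, LOW);                      // outputs keep their old state while shifting
  shiftOut(dataPin, clockPin, MSBFIRST, B10101010); // Q7, Q5, Q3 and Q1 end up HIGH
  digitalWrite(latchPin, HIGH);                     // rising edge copies the bits to Q0–Q7
  delay(500);
}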
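Why B10101010 lights Q1, Q3, Q5 and Q7: with MSBFIRST, shiftOut() sends bit 7 first, and each SH_CP pulse moves every bit one stage toward Q7, so after eight pulses bit n of the byte sits on Qn. The outputs only change when ST_CP rises. The model below is a simplified logic-level sketch (no timing, OE assumed LOW and MR HIGH); the class and function names are hypothetical.

```javascript
// Simplified logic-level model of one 74HC595 (OE tied LOW, MR tied HIGH).
class HC595 {
  constructor() {
    this.shiftReg = 0; // bit n = shift stage n
    this.storage = 0; // bit n = what output Qn currently shows
  }
  // One SH_CP rising edge: every bit moves one stage toward Q7, DS enters stage 0.
  clock(dataBit) {
    this.shiftReg = ((this.shiftReg << 1) | (dataBit & 1)) & 0xff;
  }
  // ST_CP rising edge: copy the shift register to the outputs.
  latch() {
    this.storage = this.shiftReg;
  }
  output(n) {
    return (this.storage >> n) & 1;
  }
}

// Same bit order as Arduino's shiftOut(dataPin, clockPin, MSBFIRST, value).
function shiftOutMsbFirst(chip, value) {
  for (let i = 7; i >= 0; i--) chip.clock((value >> i) & 1);
}

const chip = new HC595();
shiftOutMsbFirst(chip, 0b10101010); // B10101010 in the Arduino sketch
chip.latch();
const states = [0, 1, 2, 3, 4, 5, 6, 7].map((n) => `Q${n}=${chip.output(n)}`);
console.log(states.join(" ")); // Q0=0 Q1=1 Q2=0 Q3=1 Q4=0 Q5=1 Q6=0 Q7=1
```

With LSBFIRST the order reverses, and bit 0 of the byte ends up on Q7.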

Typical SIPO use cases

- LEDs

- Relays

- MOSFET boards

- 7-segment displays

- Control panels

- Power boards

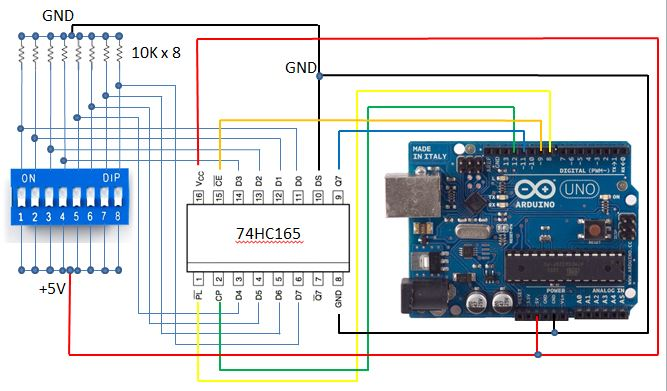

PISO – Parallel In, Serial Out

What it does

Reads many inputs at once, then sends their state serially to the microcontroller.

Common chip

74HC165

- 8 digital inputs

- Can be chained

- Very simple timing

Important pins

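| Pin | Role |
|---|---|
| PL | Parallel load (active LOW) |
| CP | Clock |
| Q7 | Serial output |
| D0–D7 | Inputs |
| DS | Serial input (for daisy-chaining) |
| CE | Clock enable, active LOW (tie to GND; called CLK INH on some datasheets) |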

Arduino example – reading 8 buttons

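int loadPin = 8;   // 74HC165 PL (parallel load, active LOW)
int clockPin = 12; // 74HC165 CP
int dataPin = 11;  // 74HC165 Q7 (serial output)

void setup() {
  Serial.begin(9600);
  pinMode(loadPin, OUTPUT);
  pinMode(clockPin, OUTPUT);
  pinMode(dataPin, INPUT);
  digitalWrite(loadPin, HIGH); // idle: no parallel load
}

byte readInputs() {
  // Park the clock HIGH before loading: shiftIn() raises the clock before
  // each read, and a rising edge at that point would shift D7 out unread.
  digitalWrite(clockPin, HIGH);
  digitalWrite(loadPin, LOW);  // capture D0–D7
  delayMicroseconds(5);
  digitalWrite(loadPin, HIGH);
  return shiftIn(dataPin, clockPin, MSBFIRST); // bit 7 = D7 ... bit 0 = D0
}

void loop() {
  byte buttons = readInputs();
  Serial.println(buttons, BIN);
  delay(200);
}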
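A logic-level model shows why the clock state before shiftIn() matters. The AVR Arduino core's shiftIn() raises the clock, reads the data pin, then lowers the clock, for each of the 8 bits. The 74HC165 already presents D7 on Q7 right after the parallel load and shifts on every rising clock edge, so if the clock idles LOW, the first rising edge shifts D7 away before it is read. This sketch assumes CE and DS tied LOW and ignores timing; the names are hypothetical.

```javascript
// Logic-level model of a 74HC165 (CE tied LOW, DS tied LOW). Not timing-accurate.
class HC165 {
  constructor() {
    this.stages = new Array(8).fill(0); // after a load, stages[n] holds input Dn
    this.clockLevel = 0;
  }
  // PL pulsed LOW: copy D0..D7 (bit n of `inputs`) into the stages.
  load(inputs) {
    for (let n = 0; n < 8; n++) this.stages[n] = (inputs >> n) & 1;
  }
  // Each LOW-to-HIGH transition of CP shifts one stage toward Q7.
  setClock(level) {
    if (this.clockLevel === 0 && level === 1) {
      for (let n = 7; n > 0; n--) this.stages[n] = this.stages[n - 1];
      this.stages[0] = 0; // value on DS
    }
    this.clockLevel = level;
  }
  get q7() {
    return this.stages[7];
  }
}

// Same sequence as the AVR core's shiftIn(..., MSBFIRST): clock HIGH, read, clock LOW.
function shiftInMsbFirst(chip) {
  let value = 0;
  for (let i = 0; i < 8; i++) {
    chip.setClock(1);
    value |= chip.q7 << (7 - i);
    chip.setClock(0);
  }
  return value;
}

function readInputs(chip, inputs, parkClockHigh) {
  if (parkClockHigh) chip.setClock(1); // clock HIGH before the load pulse
  chip.load(inputs);
  return shiftInMsbFirst(chip);
}

console.log(readInputs(new HC165(), 0b10000001, false).toString(2)); // "10": D7 lost, bits shifted
console.log(readInputs(new HC165(), 0b10000001, true).toString(2)); // "10000001"
```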

SIPO vs PISO

| Feature | SIPO (74HC595) | PISO (74HC165) |
|---|---|---|
| Direction | Output | Input |
| GPIO needed | 3 | 3 |
| Chainable | Yes | Yes |
| Speed | High | High |
| Typical use | LEDs, relays | Buttons, switches |

Arduino vs ESP considerations

Arduino (5V)

- Works perfectly with HC / HCT chips

- Very forgiving

ESP8266 / ESP32 (3.3V)

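- Either power the chip at 3.3V, or use 74HCT at 5V (HCT inputs accept 3.3V logic)

- Avoid floating OE / MR pins

- Logic levels matter more: a 74HC165 powered at 5V drives 5V into the ESP, whose GPIOs are not rated for 5V, so power input registers at 3.3V

In practice, a 74HC powered at 5V often accepts 3.3V signals, but 3.3V is below its guaranteed input-high level (roughly 3.5V at VCC = 5V), so it’s not guaranteed in all cases.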

Daisy-chaining

You can chain registers easily:

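- Q7’ → DS of the next chip for SIPO (74HC595)

- Q7 of each chip → DS of the chip closer to the microcontroller for PISO (74HC165)

- Shared clock and latch lines (ST_CP on the 74HC595, PL on the 74HC165)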

Examples:

- 4 × 74HC595 → 32 outputs

- 2 × 74HC165 → 16 inputs
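A logic-level model of chained 74HC595s shows the ordering rule that usually trips people up: because bits flow from chip to chip through Q7’, the first byte shifted out ends up in the chip farthest from the microcontroller. The sketch ignores timing and the function name is hypothetical.

```javascript
// Logic-level model of `chipCount` chained 74HC595s: Q7' of chip k feeds DS of chip k+1.
// Index 0 is the chip wired to the microcontroller. Returns what each chip
// outputs after the shared latch pulse.
function shiftOutChain(chipCount, bytes) {
  const regs = new Array(chipCount).fill(0);
  for (const value of bytes) {
    for (let i = 7; i >= 0; i--) { // MSBFIRST, like shiftOut()
      let carry = (value >> i) & 1; // bit presented on the first chip's DS
      for (let k = 0; k < chipCount; k++) {
        const overflow = (regs[k] >> 7) & 1; // bit leaving chip k through Q7'
        regs[k] = ((regs[k] << 1) | carry) & 0xff;
        carry = overflow; // becomes DS of chip k + 1
      }
    }
  }
  return regs;
}

// 4 × 74HC595 = 32 outputs, one byte per chip, farthest chip first.
const outputs = shiftOutChain(4, [0x04, 0x03, 0x02, 0x01]);
console.log(outputs); // chip 0 shows 0x01, chip 3 shows 0x04
```

On the Arduino side, that is one digitalWrite(latchPin, LOW), then one shiftOut() per chip starting with the farthest one, then a single digitalWrite(latchPin, HIGH).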

Alternatives

| Chip | Type | Why use it |
|---|---|---|
| MCP23017 | I²C GPIO | Interrupts |
| PCF8574 | I²C GPIO | Very simple |
| TLC5940 | PWM LED | Brightness control |
| ULN2003 | Driver | Current handling |

Still, SIPO / PISO are hard to beat for simplicity and reliability.

When SIPO / PISO are the right choice

Good choice if you want:

- Simple hardware

- Deterministic timing

- No complex bus

- Easy scaling

Not ideal if you need:

- Analog inputs

- Per-pin interrupts

- Complex feedback

Conclusion

SIPO and PISO shift registers are basic but extremely powerful tools.

They are cheap, fast, reliable, and perfectly suited for Arduino and ESP projects.

If you are building:

- a custom board

- a control panel

- a vending machine

- home automation hardware

This should be one of your first tools.

Evaluating iSCSI vs NFS for Proxmox Storage: A Comparative Analysis

In virtualization, the choice of storage protocol has a direct impact on performance and efficiency. Proxmox VE, a prominent open-source virtualization platform, offers a variety of storage options for Virtual Machines (VMs) and containers. Among these, iSCSI and NFS are popular choices. This article examines the strengths and weaknesses of both in the context of Proxmox storage, to help IT professionals make informed decisions.

Introduction to iSCSI and NFS

iSCSI (Internet Small Computer Systems Interface) is a storage networking standard that enables the transport of block-level data over IP networks. It allows clients (initiators) to send SCSI commands to SCSI storage devices (targets) on remote servers. iSCSI is known for its block-level access, providing the illusion of a local disk to the operating system.

NFS (Network File System), on the other hand, is a distributed file system protocol allowing a user on a client computer to access files over a network in a manner similar to how local storage is accessed. NFS operates at the file level, providing shared access to files and directories.

Performance Considerations

When evaluating iSCSI and NFS for Proxmox storage, performance is a critical factor.

iSCSI offers block-level access, which often translates into lower latency and higher I/O throughput than file-level access, although results depend on the storage backend. This can be particularly beneficial for applications requiring high I/O performance, such as databases. However, iSCSI performance depends heavily on network stability and configuration; proper tuning and a dedicated network for iSCSI traffic can mitigate potential bottlenecks.

NFS might not match the raw speed of iSCSI in block-level operations, but its simplicity and efficiency in handling file operations make it a strong contender. NFS v4.1 and later versions introduce performance enhancements and features like pNFS (parallel NFS) that can significantly boost throughput and reduce latency in environments with high-demand file operations.

Scalability and Flexibility

NFS shines in terms of scalability and flexibility. As file-based storage, NFS makes it easy to manage, share, and scale files across multiple clients. NFSv3's stateless design simplifies recovery from network disruptions, and NFSv4, although stateful, uses leases to recover client state. For environments requiring seamless access to shared files or directories, NFS is often the preferred choice.

iSCSI, while scalable, may require more intricate management as the storage needs grow. Each iSCSI target appears as a separate disk, and managing multiple disks across various VMs can become challenging. However, iSCSI’s block-level access provides a high degree of flexibility in terms of partitioning, file system choices, and direct VM disk access, which can be crucial for certain enterprise applications.

Ease of Setup and Management

From an administrative perspective, the ease of setup and ongoing management is another vital consideration.

NFS is generally perceived as easier to set up and manage, especially in Linux-based environments like Proxmox. The configuration is straightforward: adding an NFS export as storage in Proxmox (Datacenter → Storage → Add → NFS) requires little more than an ID, the server address, and the export path, after which the nodes mount it automatically. This ease of use makes NFS an attractive option for smaller deployments or scenarios where IT resources are limited.

iSCSI requires a more detailed setup process, including configuring initiators, targets, and often dealing with CHAP authentication for security. While this complexity can be a barrier to entry, it also allows for fine-grained control over storage access and security, making iSCSI a robust choice for larger, security-conscious deployments.

Security and Reliability

Security and reliability are paramount in any storage solution.

iSCSI supports robust security features like CHAP authentication and can be integrated with IPsec for encrypted data transfer, providing a secure storage solution. The block-level access of iSCSI, while efficient, means that corruption on the block level can have significant repercussions, necessitating strict backup and disaster recovery strategies.

NFS can be more exposed on untrusted networks: with the traditional AUTH_SYS security flavor, the server trusts the client's IP address and the user IDs the client reports. However, NFSv4 introduced improved security features, including Kerberos authentication and integrity checking. File-level storage also means that corruption is usually limited to individual files, potentially reducing the impact of data integrity issues.

Conclusion

Choosing between iSCSI and NFS for Proxmox storage depends on various factors, including performance requirements, scalability needs, administrative expertise, and security considerations. iSCSI offers high performance and fine-grained control suitable for intensive applications and large deployments. NFS, with its ease of use, flexibility, and file-level operations, is ideal for environments requiring efficient file sharing and simpler management. Ultimately, the decision should be guided by the specific needs and constraints of the deployment environment, with a thorough evaluation of both protocols’ strengths and weaknesses.

https://www.youtube.com/watch?v=2HfckwJOy7A

How to Resolve Proxmox VE Cluster Issues by Temporarily Stopping Cluster Services

If you’re managing a Proxmox VE cluster, you might occasionally need to change the cluster configuration, for example to modify the Corosync configuration or address synchronization issues. One effective way to make these changes safely is to temporarily stop the cluster services. In this article, we’ll walk you through stopping the pve-cluster and corosync services and starting the Proxmox cluster filesystem daemon (pmxcfs) in local mode.

Understanding the Components

Before diving into the solution, it’s crucial to understand the role of each component:

- pve-cluster: This service manages the Proxmox VE cluster’s configurations and coordination, ensuring that all nodes in the cluster are synchronized.

- corosync: The Corosync Cluster Engine provides the messaging and membership services that form the backbone of the cluster, facilitating communication between nodes.

- pmxcfs (Proxmox Cluster File System): This is a database-driven file system designed for storing cluster configurations. It plays a critical role in managing the cluster’s shared configuration data.

Step-by-Step Solution

When you need to make changes to your cluster configurations, follow these steps to ensure a safe and controlled update environment:

- Prepare for Maintenance: Notify any users of the impending maintenance and ensure that you have a backup of all critical configuration files. It’s always better to be safe than sorry.

- Stop the pve-cluster Service: Begin by stopping the pve-cluster service to halt the synchronization process across the cluster:

systemctl stop pve-cluster

- Stop the corosync Service: Next, stop the Corosync service to prevent any cluster membership updates while you’re making your changes:

systemctl stop corosync

- Start pmxcfs in Local Mode: With the cluster services stopped, start pmxcfs in local mode using the -l flag. This lets you work on the configuration files under /etc/pve without immediate propagation to other nodes:

pmxcfs -l

- Make Your Changes: With pmxcfs running in local mode, make the necessary changes to your cluster configuration files. Double-check every modification for accuracy.

- Stop the Local pmxcfs Instance: The pve-cluster service starts its own pmxcfs, so terminate the local-mode instance before restarting the services:

killall pmxcfs

- Restart the Services: Once your changes are complete and verified, restart the corosync and pve-cluster services to re-enable the cluster functionality:

systemctl start corosync
systemctl start pve-cluster

- Verify Your Work: After the services are back up, verify that your changes have been applied and that the cluster is functioning as expected, for example with pvecm status.

Conclusion

Modifying cluster configurations in a Proxmox VE environment can be a delicate process, requiring careful planning and execution. By temporarily stopping the pve-cluster and corosync services and leveraging the local mode of pmxcfs, you gain a controlled environment to make and apply your changes safely. Always ensure that you have backups of your configuration files before proceeding and thoroughly test your changes to avoid unintended disruptions to your cluster’s operation.

Remember, while this method can be effective for various configuration changes, it’s crucial to consider the specific needs and architecture of your cluster. When in doubt, consult the Proxmox VE documentation or seek assistance from the community or professional support.

What I Can Do For You

Data Science

Unlocking insights and driving business growth through data analysis and visualization as a freelance data scientist.

Data Analysis

Helping businesses make informed decisions through insightful data analysis as a freelance data analyst.

Website Development

Bringing your online presence to life with customized website development solutions as a freelance developer.

Home Automation

Transforming your living space into a smart home with custom home automation solutions as a freelance home automation expert.

Docker & Server

Optimizing your software development and deployment with Docker and server management as a freelance expert.

Consultancy

Identification of scope, assessment of feasibility, cleaning and preparation of data, selection of tools and algorithms.

My Portfolio

My Resume

Education

MSc Data Science and Artificial Intelligence

2022 - 2023
Training in data science & artificial intelligence methods, emphasizing mathematical and computer science perspectives.

Master in Management

EDHEC Business School (2005 - 2009)
English Track Program - Major in Entrepreneurship.

BSc in Applied Mathematics and Social Sciences

University Paris 7 Denis Diderot (2006)
General university studies with a focus on applied mathematics and social sciences.


Higher School Preparatory Classes

Lycée Jacques Decour - Paris (2002 - 2004)
Preparatory classes for the business Grandes Écoles (Classe préparatoire aux Grandes Écoles de Commerce), science track.

Scientific Baccalaureate

1999 - 2002
Mathematics Major

Data Science

Python

SQL

Machine learning libraries

Data visualization tools

Big Data (Spark, Hive)

Data Analysis

Spreadsheet software

Data visualization tools (Tableau, PowerBI, and Matplotlib)

Statistical software (SAS, SPSS)

SAP Business Objects

Database management systems (SQL, MySQL)

Development

HTML

CSS

JAVASCRIPT

SOFTWARE

Version Control Systems

MLOps

CI/CD

Docker and Kubernetes

AutoML

Model serving frameworks

Prometheus, Grafana

Job Experience

Consulting, Automation and Security

(2019 - Present)
Implementation of automated reporting tools via SAP Business Objects, Processing and securing sensitive data (data wrangling, encryption, redundancy), Remote monitoring management solutions via connected objects (IoT) and image processing, Internal pentesting and network security consulting, VPN implementation, Outsourcing of servers.

Consulting, E-commerce and Digital Marketing

(2017 - 2019)
Consulting in e-commerce and digital marketing (Bangkok area), Booking.com, Airbnb, Agoda online booking management for third parties, SEO in the hotel industry.

Consulting, internal company network

(2016 - 2017)
Implementation of corporate networks and virtualization solutions (rack cabling, firewalls, Proxmox virtualization), Management of firewalls and internal networks (pfSense).

Entrepreneurship Experience

Founder, web developer

(2015 - 2020)
Programming and maintenance of websites and web applications, Consulting in digitalization and process optimization for local SMEs, Implementation of turnkey e-commerce solutions.

Corporate Banking

Assistant Fund Manager

Credit Portfolio Management - CALYON - 2008

- Preparation of committee notes for new ABS/CDO credit derivative investments

- Calculation and measurement of portfolio risk (Value-at-Risk, exotic and vanilla ABS, SWAP, liquidity lines)

- Daily monitoring of credit derivatives portfolio structures (Mark-to-Market, P&L, re-financing)

- Design of risk measurement and decision support tools in VBA

Credit Risk Analyst

Risk and Controls Department – NATIXIS – Paris

- Study of financing files for review by the credit committee (structured finance, commodity trade finance, and car manufacturers)

- Financial analysis, rating, and credit risk analysis of a portfolio of companies

- Financing files studied: from €1m to €1000m

Retail Banking

Assistant Business Account Manager

BNP Paribas - 2006

- Writing reports on business plans for small SMEs

- Risk and feasibility analysis and decision making

- Negotiation of financing packages with applicants

Contact Me

Mehdi Fekih

Data Scientist.

I am available for freelance work. Connect with me via this contact form or feel free to send me an email.

Phone: +33 (0) 7 82 90 60 71

Email: mehdi.fekih@edhec.com